The triumph of generic databases

The computerization of commonsense knowledge goes back at least to Ross Quillian’s paper from the 1969 book Semantic Information Processing. Ross used methods that aren’t that different from what I use today, but he was able to store just a few hundred concepts in his computer.

The Cyc project, starting in the 1980s contained about 3 million facts. It was successful on it’s own terms, but it didn’t lead to the revolution in natural language processing that it promised. WordNet, from the same era, documents about 120,000 word senses, but like Cyc, hasn’t had a large engineering impact.

DBpedia and Freebase have become popular lately, I think because they’re a lot like traditional databases in character. For a person, place or creative work you’ve got the information necessary to make a ‘Pokemon card’ about the topic. With languages like SPARQL and MQL it’s possible to write queries you’d write in a relational database, so people have an idea what to do with it.

DBpedia and Freebase are much larger than the old commonsense databases. The English Dbpedia contains 4 million topics derived from Wikipedia pages and Freebase contains 24 million facts about 600 million topics. It’s hard to quantify it, but subjectively, people feel like Wikipedia contains most of the concepts that turn up when they are reading or thinking about things. Because the new generic databases are about concepts rather than words, they are inherently multilingual.

DBpedia Spotlight is the first of a breed of language processing products that use world knowledge instead of syntactic knowledge. Using a knowledge base created from DBpedia and Wikipedia, Spotlight gets accuracy comparable to commercial named entity recognition systems — although Spotlight uses simple methods and, so far, has made little of the effort a commercial system would to systematically improve accuracy.

pain points

The greatest difficulty with generic databases today is documentation. :BaseKB, derived from Freebase, has roughly 10,000 classes and 100,000 properties. Finding what you’re looking for, and even knowing if it is available, can be difficult.

Data quality is another problem, but it’s hard to characterize. The obvious problem is that you write queries and get wrong answers. If, for instance, you ask for the most important cities in the world, you might find that some big ones are missing but that some entities other than cities, such as countries, have been mistyped. If you look for the people with the latest birthdates, some may be in the future — some may be wrong, some may be anticipated, and others could be mistyped science fiction characters.

Quality problems can have an impact beyond their rate of incidence. Wikipedia, for instance, contains about 200,000 pages documenting the tree of biological classification. Wikipedians don’t have real tools for editing hierarchies, so any edit they make to the tree could break the structure of the tree as a whole. Even if tree-breaking errors occurred at a rate of 1-in-50,000 it would be impossible (or incorrect) to use algorithms on this tree that assume it is really a tree.

Quality problems of this sort can be found by systematic testing and evaluation — these can be repaired at the source or in a separate data transformation step.

intelligence without understanding

One answer to the difficulties of documentation that use algorithms that use predicates wholesale; one might filter or weight predicates, but not get two concerned about the exact meaning of any particular predicate. Systems like this can make subjective judgments about importance, offensiveness, and other attributes of topics. The best example of this is seevl.net a satisfying music recommendation engine developed with less than 10% of the expense and effort that goes into something like Last.FM or Pandora.

These approaches are largely unsupervised and work well because the quality (information density) of semantic data is higher than data about keywords, votes, and other inputs to social-IR system. Starting with a good knowledge base, the “cold start” problem in collaborative filtering can be largely obliterated.

beyond predicates

In the RDF model we might make a statement like

:Robert_Redford :actedIn :Sneakers .

we’ve yet to have a satisfactory way to say things about this relationship. Was he the star of this movie? Did he play a minor part? Who added this fact? How must do we trust it?

These issues are particularly important for a data Wiki, like Freebase, because provenance information is crucial if multiple people are putting facts into a system.

These issues are also important in user interfaces because, if we know thousands of facts about a topic, we probably can’t present them all, and if we did, we can’t present them all equally. For an actor, for instance, we’d probably want to show the movies they starred in, not the movies they played a minor role in.

Google is raising the standard here: this article shows how the Google Knowledge Graph can convert the “boiling point” predicate into a web search and sometimes shows the specific source of a fact.

This points to a strategy for getting the missing information about statements — if we have a large corpus of documents (such as a web crawl) we search that corpus for sentences that discuss a fact. Presumably important facts get talked about more frequently than unimportant facts, and this can be used as a measure for importance.

I’ll point out that Common Crawl is a freely available web crawl hosted in Amazon S3 and that by finding links to Wikipedia and official web sites one can build a corpus that’s linked to Dbpedia and Freebase concepts.

lexical data

Although Freebase and DBpedia know of many names for things, they lack detailed lexical data. For instance, if you’re synthesizing text, you need to know something about the labels you’re using so you can conjugate verbs, make plurals, and use the correct article. Things are relatively simple in English, but more complex in languages like German. Parts-of-speech tags on the labels would help with text analysis.

YAGO2 is an evolving effort to reconcile DBpedia’s concept database with WordNet; it’s one of the few large-scale reconciliations that has been statistically evaluated and was found to be 95% accurate. YAGO2′s effectiveness has been limited, however, by WordNet’s poor coverage compared with DBpedia. There’s a need for a lexical extension to DBpedia and Freebase that’s based on a much larger dictionary than WordNet.

relationship with machine learning

I was involved with the text classification with the Support Vector Machine around 2003 and expected to see the technology to become widely used. In our work we found we got excellent classification performance when we had large (10,000 document) training sets, but we got poor results with 50 document sets.

Text classification hasn’t been so commercially successful and I think that’s because few users will want to classify 10,000 documents to train a classifier, and even in large organizations, many classes of documents won’t be that large (when you get more documents, you slice them into more categories.)

I think people learn efficiently because they have a large amount of background knowledge that helps them make hypotheses that work better than chance. If we’re doing association mining between a million signals, there are a trillion possible associations. If we know that some relationships are more plausible than others, we can learn more quickly. With large numbers of named relationships, it’s possible that we can discover patterns and give them names, in contrast to the many unsupervised algorithms that discover real patterns but can’t explain what they are.

range of application

Although generic databases seem very general, people I talk to are often concerned that they won’t cover terms they need, and some cases they are right.

If a system is parsing notes taken in an oil field, for instance, the entities involved are specific to a company — Robert Redford and even Washington, DC just aren’t relevant.

Generic databases apply obviously to media and entertainment. Fundamentally, the media talks about things that are broadly known, and things that are broadly known are in Wikipedia. Freebase adds extensive metadata for music, books, tv and movies, so that’s all the better for entertainment.

It’s clear that there are many science and technology topics in Wikipedia in Freebase. Freebase has more than 10,000 chemical compounds and 38,000 genes. Wikipedia documents scientific and technological concepts in great detail, so there are certainly the foundations for a science and technology knowledge base there — evaluating how good of a foundation this is and how to improve it is a worthwhile topic.

web and enterprise search

schema.org, by embedding semantic data, makes radical web browsing and search. Semantic labels applied to words can be resolved to graph fragments in DBpedia, Freebase and similar databases. A system that constructs a search index based on concepts rather than words could be effective at answering many kinds of queries. The same knowledge base could be processed into something like the Open Directory, but better, because it would not be muddled and corrupted by human editors.

Concept-based search would be effective for the enterprise as well, where it’s necessary to find things when people use different (but equivalent) words in search than exist in the document. Concept-based search can easily be made strongly bilingual.

middle and upper ontologies

It is exciting today to see so many knowledge bases making steady improvements. Predicates in Freebase, for instance, are organized into domains (“music”) and further organized into 46 high level patterns such as “A created B”, “A administrates B” and so forth. This makes it possible to tune weighting functions in an intuitive, rule-based, manner.

Other ontologies, like UMBEL, are reconciled with generic databases but reflect a fundamentally different point of view. For instance, UMBEL documents human anatomy in much better detail than Freebase so it could play a powerful supplemental role.

conclusion

generic databases, when brought into confrontation with document collections, can create knowledge bases that enable computers to engage with text in unprecedented depth. They’ll not only improve search, but also open a world of browsing and querying languages that will let users engage with data and documents in entirely new ways.

]]>

Introduction

Languages such as LISP, ML, oCaml F# and Scala have supported first-class functions for a long time. Functional programming features are gradually diffusing into mainstream languages such as C#, Javascript and PHP. In particular, Lambda expressions, implicit typing, and delegate autoboxing have made C# 3.0 an much more expressive language than it’s predecssors.

In this article, I develop a simple function that acts on functions: given a boolean function f, F.Not(f) returns a new boolean function which is the logical negation of f. (That is, F.Not(f(x)) == !f(x)). Although the end function is simple to use, I had to learn a bit about the details of C# to ge tthe behavior I wanted — this article records that experience.

Why?

I was debugging an application that uses Linq-to-Objects the other day, and ran into a bit of a pickle. I had written an expression that would return true if all of the values in a List<String> were valid phone numbers:

[01] dataColumn.All(Validator.IsValidPhoneNumber);

I was expecting the list to validate successfully, but the function was returning false. Obviously at least one of the values in the function was not validating — but which one? I tried using a lambda expression in the immediate window (which lets you type in expression while debugging) to reverse the order of matching:

[02] dataColumn.Where(s => !ValidatorIsValidPhoneNumber(x));

but that gave me the following error message:

[03] Expression cannot contain lambda expressions

(The expression compiler used in the immediate window doesn’t support all of the language features supported by the full C# compiler.) I was able to answer find the non matching elements using the set difference operator

[04] dataColumn.Except(dataColumn.Where(Validator.IsValidPhoneNumber).ToList()

but I wanted an easier and more efficient way — with a logical negation function, I could just write

[05] dataColumn.Except(F.Not(Validator.IsValidPhoneNumber).ToList();

Try 1: Extension Method

I wanted to make the syntax for negation as simple as possible. I didn’t want to even have to name a class eliminate the need to name a class, so I tried the following extension method:

[06] static class FuncExtensions {

[07] public static Func<Boolean> Not(this Func<Boolean> innerFunction) {

[08] return () => innerFunction();

[09] }

[10] ...

[11] }

I was hoping that, given a function like

[12] public static boolean AlwaysTrue() { return true };

that I could negate the function by writing

[13] AlwaysTrue.Not()

Unfortunately, it doesn’t work that way: the extension method can only be called on a delegate of type Func<Boolean>. Although the compiler will “autobox” function references to delegates in many situations, it doesn’t do it when you reference an extension method. I could write

[14] (Func<Boolean> AlwaysTrue).Not()

but that’s not a pretty syntax. At that point, I tried another tack.

Try 2: Static Method

Next, I defined a set of negation functions as static methods on a static class:

[15] public static class F {

[16] public static Func<Boolean> Not(Func<Boolean> innerFunction) {

[17] return () => !innerFunction();

[18] }

[19]

[20] public static Func<T1,Boolean> Not<T1>(

[21] Func<T1,Boolean> innerFunction) {

[22] return x =>!innerFunction(x);

[23] }

[24]

[25] public static Func<T1, T2,Boolean> Not<T1,T2>(

[26] Func<T1, T2,Boolean> innerFunction) {

[27] return (x,y) => !innerFunction(x,y);

[28] }

[29]

[30] public static Func<T1, T2, T3, Boolean> Not<T1,T2,T3>(

[31] Func<T1, T2, T3, Boolean> innerFunction) {

[32] return (x, y, z) => !innerFunction(x, y, z);

[33] }

[34]

[35] public static Func<T1, T2, T3, T4, Boolean> Not<T1, T2, T3, T4>(

[36] Func<T1, T2, T3, T4,Boolean> innerFunction) {

[37] return (x, y, z, a) => !innerFunction(x, y, z, a);

[38] }

[39] }

Now I can write

[40] F.Not(AlwaysTrue)() // always == false

or

[41] Func<int,int,int,int,Boolean> testFunction = (a,b,c,d) => (a+b)>(c+d) [42] F.Not(testFunction)(1,2,3,4)

which is sweet — the C# compiler now automatically autoboxes the argument to F.Not on line [40]. Note two details of how type inference works here:

- The compiler automatically infers the type parameters of F.Not() by looking at the arguments of the innerFunction. If it didn’t do that, you’d need to write

F.Not<int,int,int,int>(testFunction)(1,2,3,4)

which would be a lot less fun.

- On line [40], note that the compiler derives the types of the parameters a,b,c, and d using the type declaration on the right hand side (RHS)

Func<int,int,int,int,Boolean>

you can’t write var on the RHS in this situation because that doesn’t give the compiler information about the parameters and return values of the lambda.

Although good things are happening behind the scenes, there are also two bits of ugliness:

- I need to implement F.Not() 5 times to support functions with 0 to 4 parameters: once I’ve done that, however, the compiler automatically resolves the overloading and picks the right version of the function.

- The generic Func<> and Action<> delegates support at most 4 parameters. Although it’s certainly true that functions with a large number of parameters can be difficult to use and maintain (and should be discouraged), this is a real limitation.

Extending IEnumerable<T>

One of the nice things about Linq is that you can extend it by adding new extension methods to IEnumerable. Not everbody agrees, but I’ve always liked the unless() satement in Perl, which is equivalent to if(!test):

[42] unless(everything_is_ok()) {

[43] abort_operation;

[44] }

A replacement for the Where(predicate) method that negates the predicate function would be convenient my debugging problem:

I built an Unless() extension method that combines Where() with F.Not():

[45] public static IEnumerable<T> Unless<T>(

[46] this IEnumerable<T> input, Func<T, Boolean> fn) {

[47] return input.Where(F.Not(fn));

[48] }

Now I can write

[49] var list = new List<int>() { 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 };

[50] var filtered = list.Unless(i => i > 3).ToList();

and get the result

[51] filtered = { 1,2,3 }

Conclusion

New features in C# 3.0 make it an expressive language for functional programming. Although the syntax of C# isn’t quite as sweet as F# or Scala, a programmer who works with the implicit typing and autoboxing rules of the compiler can create functions that act on functions that are easy to use — in this article we develop a set of functions that negate boolean functions and apply this to add a new restriction method to IEnumerable<T>.

]]>

Freebase is a open database of things that exist in the world: things like people, places, songs and television shows. As of the January 2009 dump, Freebase contained about 241 million facts, and it’s growing all the time. You can browse it via the web and even edit it, much like Wikipedia. Freebase also has an API that lets programs add data and make queries using a language called MQL. Freebase is complementary to DBpedia and other sources of information. Although it takes a different approach to the semantic web than systems based on RDF standards, it interoperates with them via linked data.

The January 2009 Freebase dump is about 500 MB in size. Inside a bzip-compressed files, you’ll find something that’s similar in spirit to a Turtle RDF file, but is in a simpler format and represents facts as a collection of four values rather than just three.

Your Own Personal Freebase

To start exploring and extracting from Freebase, I wanted to load the database into a star schema in a mysql database — an architecture similar to some RDF stores, such as ARC. The project took about a week of time on a modern x86 server with 4 cores and 4 GB of RAM and resulted in a 18 GB collection of database files and indexes.

This is sufficient for my immediate purposes, but future versions of Freebase promise to be much larger: this article examines the means that could be used to improve performance and scalability using parallelism as well as improved data structures and algorithms.

I’m interested in using generic databases such as Freebase and Dbpedia as a data source for building web sites. It’s possible to access generic databases through APIs, but there are advantages to having your own copy: you don’t need to worry about API limits and network latency, and you can ask questions that cover the entire universe of discourse.

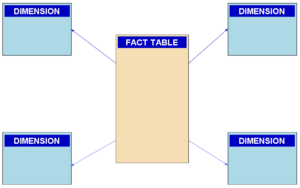

Many RDF stores use variations of a format known as a Star Schema for representing RDF triples; the Star Schema is commonly used in data warehousing application because it can efficiently represent repetitive data. Freebase is similar to, but not quite an RDF system. Although there are multiple ways of thinking about Freebase, the quarterly dump files provided by Metaweb are presented as quads: groups of four related terms in tab-delimited terms. To have a platform for exploring freebase, I began a project of loading Freebase into a Star Schema in a relational database.

A Disclaimer

Timings reported in this article are approximate. This work was done on a server that was doing other things; little effort was made to control sources of variation such as foreign workload, software configuration and hardware characteristics. I think it’s orders of magnitude that matter here: with much larger data sets becoming available, we need tools that can handle databases 10-100 times as big, and quibbling about 20% here or there isn’t so important. I’ve gotten similar results with the ARC triple store. Some products do about an order of magnitude better: the Virtuoso server can load DBpedia, a larger database, in about 16 to 22 hours on a 16 GB computer: several papers on RDF store performance are available [1] [2] [3]. Although the system described in this paper isn’t quite an RDF store, it’s performance is comprable to a relatively untuned RDF store.

It took about a week of calendar time to load the 241 million quads in the January 2009 Freebase into a Star Schema using a modern 4-core web server with 4GB of RAM; this time could certainly be improved with microoptimizations, but it’s in the same range that people are observing that it takes to load 10^8 triples into other RDF stores. (One product is claimed to load DBPedia, which contains about 100 million triples, in about 16 hours with “heavy-duty hardware”.) Data sets exceeding 10^9 triples are becoming rapidly available — these will soon exceed what can be handled with simple hardware and software and will require new techniques: both the use of parallel computers and optimized data structures.

The Star Schema

In a star schema, data is represented in separate fact and dimension tables,

all of the rows in the fact table (quad) contain integer keys — the values associated with the keys are defined in dimension tables (cN_value). This efficiently compresses the data and indexes for the fact table, particularly when the values are highly repetitive.

I loaded data into the following schema:

create table c1_value ( id integer primary key auto_increment, value text, key(value(255)) ) type=myisam; ... identical c2_value, c3_value and c4_value tables ... create table quad ( id integer primary key auto_increment, c1 integer not null, c2 integer not null, c3 integer not null, c4 integer not null ) type=myisam;

Although I later created indexes on c1, c2, c3, and c4 in the quad table, I left unnecessary indexes off of the tables during the loading process because it’s more efficient to create indexes after loading data in a table. The keys on the value fields of the dimension tables are important, because the loading process does frequent queries to see if values already exist in the dimension table. The sequentially assigned id in the quad field isn’t necessary for many applications, but it gives each a fact a unique identity and makes the system aware of the order of facts in the dump file.

The Loading Process

The loading script was written in PHP and used a naive method to build the index incrementally. In pseudo code it looked something like this:

function insert_quad($q1,$q2,$q3,$q4) {

$c1=get_dimension_key(1,$q1);

$c2=get_dimension_key(2,$q2);

$c3=get_dimension_key(3,$q3);

$c4=get_dimension_key(4,$q4);

$conn->insert_row("quad",null,$c1,$c2,$c3,$c4)

}

function get_dimension_key($index,$value) {

$cached_value=check_cache($value);

if ($cached_value)

return $cached_value;

$table="$c{$index}_value";

$row=$conn->fetch_row_by_value($table,$value);

if ($row)

return $row->id;

$conn->insert_row($table,$value);

return $conn->last_insert_id

};

Caching frequently used dimension values improves performance by a factor of five or so, at least in the early stages of loading. A simple cache management algorithm, clearing the cache every 500,000 facts, controls memory use. Timing data shows that a larger cache or better replacement algorithm would make at most an increment improvement in performance. (Unless a complete dimension table index can be held in RAM, in which case all read queries can be eliminated.)

I performed two steps after the initial load:

- Created indexes on quad(c1), quad(c2), quad(c3) and quad(c4)

- Used myisam table compression to reduce database size and improve performance

Loading Performance

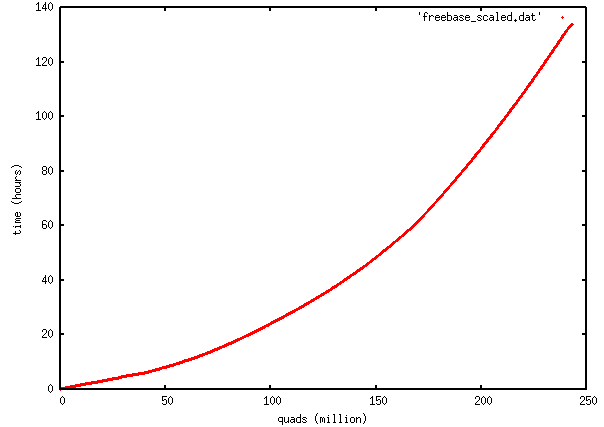

It took about 140 hours (nearly 6 days) to do the initial load. Here’s a graph of facts loaded vs elapsed time:

The important thing Iabout this graph is that it’s convex upward: the loading process slows down as the number of facts increases. The first 50 quads are loaded at a rate of about 6 million per hour; the last 50 are loaded at a rate of about 1 million per hour. An explanation of the details of the curve would be complex, but log N search performance of B-tree indexes and the ability of the database to answer queries out of the computer’s RAM cache would be significant. Generically, all databases will perform the same way, becoming progressively slower as the size of the database increases: you’ll eventually reach a database size where the time to load the database becomes unacceptable.

The process of constructing b-tree indexes on the mysql tables took most of a day. On average it took about four hours to construct a b-tree index on one column of quad:

mysql> create index quad_c4 on quad(c4); Query OK, 243098077 rows affected (3 hours 40 min 50.03 sec) Records: 243098077 Duplicates: 0 Warnings: 0

It took about an hour to compress the tables and rebuild indexes, at which point the data directory looks like:

-rw-r----- 1 mysql root 8588 Feb 22 18:42 c1_value.frm -rw-r----- 1 mysql root 713598307 Feb 22 18:48 c1_value.MYD -rw-r----- 1 mysql root 557990912 Feb 24 10:48 c1_value.MYI -rw-r----- 1 mysql root 8588 Feb 22 18:56 c2_value.frm -rw-r----- 1 mysql root 485254 Feb 22 18:46 c2_value.MYD -rw-r----- 1 mysql root 961536 Feb 24 10:48 c2_value.MYI -rw-r----- 1 mysql root 8588 Feb 22 18:56 c3_value.frm -rw-r----- 1 mysql root 472636380 Feb 22 18:51 c3_value.MYD -rw-r----- 1 mysql root 370497536 Feb 24 10:51 c3_value.MYI -rw-r----- 1 mysql root 8588 Feb 22 18:56 c4_value.frm -rw-r----- 1 mysql root 1365899624 Feb 22 18:44 c4_value.MYD -rw-r----- 1 mysql root 1849223168 Feb 24 11:01 c4_value.MYI -rw-r----- 1 mysql root 65 Feb 22 18:42 db.opt -rw-rw---- 1 mysql mysql 8660 Feb 23 17:16 quad.frm -rw-rw---- 1 mysql mysql 3378855902 Feb 23 20:08 quad.MYD -rw-rw---- 1 mysql mysql 9927788544 Feb 24 11:42 quad.MYI

At this point it’s clear that the indexes are larger than the actual databases: note that c2_value is much smaller than the other tables because it holds a relatively small number of predicate types:

mysql> select count(*) from c2_value; +----------+ | count(*) | +----------+ | 14771 | +----------+ 1 row in set (0.04 sec) mysql> select * from c2_value limit 10; +----+-------------------------------------------------------+ | id | value | +----+-------------------------------------------------------+ | 1 | /type/type/expected_by | | 2 | reverse_of:/community/discussion_thread/topic | | 3 | reverse_of:/freebase/user_profile/watched_discussions | | 4 | reverse_of:/freebase/type_hints/included_types | | 5 | /type/object/name | | 6 | /freebase/documented_object/tip | | 7 | /type/type/default_property | | 8 | /type/type/extends | | 9 | /type/type/domain | | 10 | /type/object/type | +----+-------------------------------------------------------+ 10 rows in set (0.00 sec)

The total size of the mysql tablespace comes to about 18GB, anexpansion of about 40 times relative to the bzip2 compressed dump file.

Query Performance

After all of this trouble, how does it perform? Not too bad if we’re asking a simple question, such as pulling up the facts associated with a particular object

mysql> select * from quad where c1=34493; +---------+-------+------+---------+--------+ | id | c1 | c2 | c3 | c4 | +---------+-------+------+---------+--------+ | 2125876 | 34493 | 11 | 69 | 148106 | | 2125877 | 34493 | 12 | 1821399 | 1 | | 2125878 | 34493 | 13 | 1176303 | 148107 | | 2125879 | 34493 | 1577 | 69 | 148108 | | 2125880 | 34493 | 13 | 1176301 | 148109 | | 2125881 | 34493 | 10 | 1713782 | 1 | | 2125882 | 34493 | 5 | 1174826 | 148110 | | 2125883 | 34493 | 1369 | 1826183 | 1 | | 2125884 | 34493 | 1578 | 1826184 | 1 | | 2125885 | 34493 | 5 | 66 | 148110 | | 2125886 | 34493 | 1579 | 1826185 | 1 | +---------+-------+------+---------+--------+ 11 rows in set (0.05 sec)

Certain sorts of aggregate queries are reasonably efficient, if you don’t need to do them too often: we can look up the most common 20 predicates in about a minute:

select

(select value from c2_value as v where v.id=q.c2) as predicate,count(*)

from quad as q

group by c2

order by count(*) desc

limit 20;

+-----------------------------------------+----------+ | predicate | count(*) | +-----------------------------------------+----------+ | /type/object/type | 27911090 | | /type/type/instance | 27911090 | | /type/object/key | 23540311 | | /type/object/timestamp | 19462011 | | /type/object/creator | 19462011 | | /type/permission/controls | 19462010 | | /type/object/name | 14200072 | | master:9202a8c04000641f800000000000012e | 5541319 | | master:9202a8c04000641f800000000000012b | 4732113 | | /music/release/track | 4260825 | | reverse_of:/music/release/track | 4260825 | | /music/track/length | 4104120 | | /music/album/track | 4056938 | | /music/track/album | 4056938 | | /common/document/source_uri | 3411482 | | /common/topic/article | 3369110 | | reverse_of:/common/topic/article | 3369110 | | /type/content/blob_id | 1174046 | | /type/content/media_type | 1174044 | | reverse_of:/type/content/media_type | 1174044 | +-----------------------------------------+----------+ 20 rows in set (43.47 sec)

You’ve got to be careful how you write your queries: the above query with the subselect is efficient, but I found it took 5 hours to run when I joined c2_value with quad and grouped on value. A person who wishes to do frequent aggregate queries would find it most efficient to create a materialized views of the aggregates.

Faster And Large

It’s obvious that the Jan 2009 Freebase is pretty big to handle with the techniques I’m using. One thing I’m sure of is that that Freebase will be much bigger next quarter — I’m not going to do it the same way again. What can I do to speed the process up?

Don’t Screw Up

This kind of process involves a number of lengthy steps. Mistakes, particularly if repeated, can waste days or weeks. Although services such as EC2 are a good way to provision servers to do this kind of work, the use of automation and careful procedures is key to saving time and money.

Partition it

Remember how the loading rate of a data set decreases as the size of the set increase? If I could split the data set into 5 partitions of 50 M quads each, I could increase the loading rate by a factor of 3 or so. If I can build those 5 partitions in parallel (which is trivial), I can reduce wallclock time by a factor of 15.

Eliminate Random Access I/O

This loading process is slow because of the involvement of random access disk I/O. All of Freebase canbe loaded into mysql with the following statement,

LOAD DATA INFILE ‘/tmp/freebase.dat’ INTO TABLE q FIELDS TERMINATED BY ‘\t’;

which took me about 40 minutes to run. Processes that do a “full table scan” on the raw Freebase table with a grep or awk-type pipeline take about 20-30 minutes to complete. Dimension tables can be built quickly if they can be indexed by a RAM hasthable. The process that builds the dimension table can emit a list of key values for the associated quads: this output can be sequentially merged to produce the fact table.

Bottle It

Once a data source has been loaded into a database, a physical copy of the database can be made and copied to another machine. Copies can be made in the fraction of the time that it takes to construct the database. A good example is the Amazon EC2 AMI that contains a preinstalled and preloaded Virtuoso database loaded with billions of triples from DBPedia, MusicBrainz, NeuroCommons and a number of other databases. Although the process of creating the image is complex, a new instance can be provisioned in 1.5 hours at the click of a button.

Compress Data Values

Unique object identifiers in freebase are coded in an inefficient ASCII representation:

mysql> select * from c1_value limit 10; +----+----------------------------------------+ | id | value | +----+----------------------------------------+ | 1 | /guid/9202a8c04000641f800000000000003b | | 2 | /guid/9202a8c04000641f80000000000000ba | | 3 | /guid/9202a8c04000641f8000000000000528 | | 4 | /guid/9202a8c04000641f8000000000000836 | | 5 | /guid/9202a8c04000641f8000000000000df3 | | 6 | /guid/9202a8c04000641f800000000000116f | | 7 | /guid/9202a8c04000641f8000000000001207 | | 8 | /guid/9202a8c04000641f80000000000015f0 | | 9 | /guid/9202a8c04000641f80000000000017dc | | 10 | /guid/9202a8c04000641f80000000000018a2 | +----+----------------------------------------+ 10 rows in set (0.00 sec)

These are 38 bytes apiece. The hexadecimal part of the guid could be represented in 16 bytes in a binary format, and it appears that about half of the guid is a constant prefix that could be further excised.

A similar efficiency can be gained in the construction of in-memory dimension tables: md5 or sha1 hashes could be used as proxies for values.

The freebase dump is littered with “reverse_of:” properties which are superfluous if the correct index structures exist to do forward and backward searches.

Parallelize it

Loading can be parallelized in many ways: for instance, the four dimension tables can be built in parallel. Dimension tables can also be built by a sorting process that can be performed on a computer cluster using map/reduce techniques. A cluster of computers can also store a knowledge base in RAM, trading sequential disk I/O for communication costs. Since the availability of data is going to grow faster than the speed of storage systems, parallelism is going to become essential for handling large knowledge bases — an issue identified by Japanese AI workers in the early 1980′s.

Cube it?

Some queries benefit from indexes built on combinations of tables, such as

CREATE INDEX quad_c1_c2 ON quad(c1,c2);

there are 40 combinations of columns on which an index could be useful — however, the cost in time and storage involved in creating those indexes would be excessively expensive. If such indexes were indeed necessary, a Multidimensional database can create a cube index that is less expensive than a complete set of B-tree indexes.

Break it up into separate tables?

It might be anathema to many semweb enthusiasts, but I think that Freebase (and parts of Freebase) could be efficiently mapped to conventional relational tables. That’s because facts in Freebase are associated with types, see, for instance, Composer from the Music Commons. It seems reasonable to map types to relational tables and to create satellite tables to represent many-to-many relationships between types. This scheme would automatically partition Freebase in a reasonable way and provide an efficient representation where many obvious questions (ex. “Find Female Composers Born In 1963 Who Are More Than 65 inches tall”) can be answered with a minimum number of joins.

Conclusion

Large knowledge bases are becoming available that cover large areas of human concern: we’re finding many applications for them. It’s possible to to handle databases such as Freebase and DBpedia on a single computer of moderate size, however, the size of generic databases and the hardware to store them on are going to grow larger than the ability of a singler computer to process them. Fact stores that (i) use efficient data structures, (ii) take advantage of parallelism, and (iii) can be tuned to the requirements of particular applications, are going to be essential for further progress in the Semantic Web.

Credits

- Metaweb Technologies, Freebase Data Dumps, January 13, 2009

- Kingsley Idehen, for several links about RDF store performance.

- Stewart Butterfield for encyclopedia photo.

The Problem

The Problem

I’ve got an IEnumerable<T> that contains a list of values: I want to know if all of the values in that field are distinct. The function should be easy to use a LINQ extension method and, for bonus points, simply expressed in LINQ itself

One Solution

First, define an extension method

01 public static class IEnumerableExtensions {

02 public static bool AllDistinct<T>(this IEnumerable<T> input) {

03 var count = input.Count();

04 return count == input.Distinct().Count();

05 }

06 }

When you want to test an IEnumerable<T>, just write

07 var isAPotentialPrimaryKey=CandidateColumn.AllDistinct();

Analysis

This solution is simple and probably scales as well as any solution in the worst case. However, it enumerates the IEnumerable<T> twice and does a full scan of the IEnumerable even if non-distinct elements are discovered early in the enumeration. I could certainly make an implementation that aborts early using a Dictionary<T,bool> to store elements we’ve seen and a foreach loop, but I wonder if anyone out there can think of a better pure-Linq solution.

]]> The Problem

The Problem

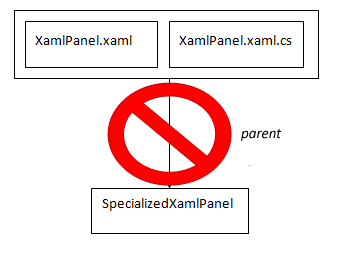

Many Silverlighters use XAML to design the visual appearance of their applications. A UserControl defined with XAML is a DependencyObject that has a complex lifecycle: there’s typically a .xaml file, a .xaml.cs file, and a .xaml.g.cs file that is generated by visual studio. The .xaml.g.cs file is generated by Visual Studio, and ensures that objects defined in the XAML file correspond to fields in the object (so they are seen in intellisense and available to your c# code.) The XAML file is re-read at runtime, and drives a process that instantiates the actual objects defined in the XAML file — a program can compile just fine, but fail during initialization if the XAML file is invalid or if you break any of the assumptions of the system.

XAML is a pretty neat system because it’s not tied to WPF or WPF/E. It can be used to initialize any kind of object: for instance, it can be used to design workflows in asynchronous server applications based on Windows Workflow Foundation.

One problem with XAML, however, is that you cannot write controls that inherit from a UserControl that defined in XAML. Visual Studio might compile the classes for you, but they will fail to initialize at at runtime. This is serious because it makes it impossible to create subclasses that let you make small changes to the appearance or behavior of a control.

Should We Just Give Up?

One approach is to give up on XAML. Although Visual Studio encourages you to create UserControls with XAML, there’s nothing to stop you from creating a new class file and writing something like

class MyUserControl:UserControl {

public MyUserControl {

var innerPanel=new StackPanel();

Content=innerPanel;

innerPanel.Children.Add(new MyFirstVisualElement());

innerPanel.Children.Add(new MySecondVisualElement());

...

}

UserControls defined like this have no (fundamental) problems with inheritance, since you’ve got complete control of the initialization process. If it were up to me, I’d write a lot of controls like this, but I work on a team with people who design controls in XAML, so I needed a better solution.

Or Should We Just Cheat?

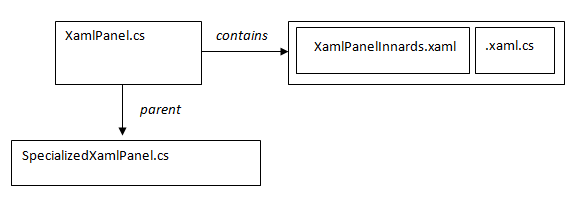

If we still want to use XAML to define the appearance of a control, we’ve got another option. We can move the XAML into a control that is outside the inheritance hierarchy: the XAML control is then contained inside another control which doesn’t have restrictions as to how it is used:

The XamlPanelInnards.xaml.cs code-behind is almost completely empty: it contains only the constructor created by Visual Studio’s XAML designer. XamlPanel.cs contains a protected member called InnerControl which is of type XamlPanelInnards. InnerControl is initialized in the constructor of XamlPanel, and is also assigned to the Content property of the XamlPanel, to make it visible. Everything that you’d ordinarily put in the code-behind XamlPanelInnards.xaml.cs goes into XamlPanel.cs, which uses the InnerControl member to get access to the internal members defined by the XAML designer.

Step-by-Step Refactoring

Let’s imagine that we’ve got an existing UserControl implemented in XAML called the XamlPanel. We’d like to subclass the XamlPanel so we can use it for multiple purposes. We can do this by:

- Renaming XamlPanel to OldXamlPanel; this renames both the *.xaml and *.xaml.cs files so we can have them to look at

- Use Visual Studio to create a new “Silverlight User Control” called XamlPanelInnards. This will have both a *.xaml and *.xaml.xs file

- Copy the contents of OldXamlPanel.xaml to XamlPanelInnards.cs. Edit the x:Class attribute of the <UserControl> element to reflect the new class name, “XamlPanelInnards”

- Use Visual Studio to create a new class, called XamlPanel.cs. Do not create a XamlPanel.xaml.cs file!

- Copy the constructor, methods, fields and properties from the OldXamlPanel.xaml.cs file to the XamlPanel.cs file.

- Create a new private field in XamlPanel.cs like

private XamlPanelInnards InnerControl;

- Now we modify the constructor, so that it does something like this:

public XamlPanel() { InnerControl = new XamlPanelInnards(); Content = InnerControl; ... remainder of the constructor ... } - Most likely you’ll have compilation errors in the XamlPanel.cs file because there are a lot of references to public fields that are now in the InnerControl. You need to track these down, and replace code that looks like

TitleTextBlock.Text="Some Title";

with

InnerControl.TitleTextBlock.Text="SomeTitle";

- Any event handler attachments done from the XAML file will fail to work (they’ll trigger an error when you load the application.) You’ll need to convert

<Button x:PressMe ... Click="PressMe_OnClick">

in the XAML file to

InnerControl.PressMe.Click += PressMe_OnClick

in the constructor of XamlPanel.

- UI Elements that are defined in XAML are public, so there’s a good chance that other classes might expect UI Elements inside the XamlPanel to be accessable. You’ve got some choices: (a) make the InnerControl public and point those references to the InnerControl (yuck!), (b) selectively add properties to XamlPanel to let outside classes access the elements that they need to access, or (c) rethink the encapsulation so that other class don’t need direct access to the members of XamlPanelInnards.

- There are a few special methods that are defined in DependencyObject and FrameworkElement that you’ll need to pay attention to. For instance, if your class uses FindName to look up elements dynamically, you need to replace

FindName("Control"+controlNumber);with

InnerControl.FindName("Control"+controlNumber);

So far this is a refactoring operation: we’re left with a program that does what it already did, but is organized differently. Where can we go from here?

Extending The XamlPanel

At this point, the XamlPanel is an ordinary UserControl class. It’s initialization logic is self-sufficient, so it can inherit (it doesn’t necessarily have to derive from UserControl) and be inherited from quite freely.

If we want to change the behavior of the XamlPanel, for instance, we could declare it abstract and leave certain methods (such as event handlers) abstract. Alternately, methods could be declared as virtual.

A number of methods exist to customize the appearance of XamlPanel: since XamlPanel can see the objects inside XamlPanelInnards, it can change colors, image sources and text contents. If you’re interested in adding additional graphical element to the Innards, the innards can contain an empty StackPanel — children of XamlPanel can Add() something to the StackPanel in their constructors.

Note that you can still include a child XamlPanel inside a control defined in XAML by writing something like

<OURNAMESPACE:SecondXamlPanel x:Name="MyInstance">

you’re free to make XamlPanel and it’s children configurable via the DependencyObject mechanisms. The one thing that you lose is public access to the graphical element inside the StackPanelInnards: many developers would think that this increase in encapsulation is a good thing, but it may involve a change in the way you do things.

Related articles

The community at silverlight.net has pointed me to a few other articles about XAML, inheritance and configuring Custom XAML controls.

In a lavishly illustrated blog entry, Amyo Kabir explains how to make a XAML-defined control inherit from a user-defined base class. It’s the converse of what I’m doing in this article, but it’s also a powerful technique: I’m using it right now to implement a number of Steps in a Wizard.

Note that Silverlight has mechanisms for creating Custom Controls: these are controls that use the DependencyObject mechanism to be configurable via XAML files that include them. If you’re interested in customizing individual controls rather than customizing subclasses, this is an option worth exploring.

Conclusion

XAML is a great system for configuring complex objects, but you can’t inherit from a Silverlight class defined in XAML. By using a containment relation instead of an inheritance relation, we can push the XAML-configured class outside of our inheritance hierarchy, allowing the container class to participate as we desire. This way we can have both visual UI generation and the software engineering benefits of inheritance.

]]>

Introduction

Lately I’ve been working on a web application based on Silverlight 2. The application uses a traditional web login system based on a cryptographically signed cookie. In early development, users logged in on an HTML page, which would load a Silverlight application on successful login. Users who didn’t have Silverlight installed would be asked to install it after logging in, rather than before.

Although it’s (sometimes) possible to determine what plug-ins a user has installed using Javascript, the methods are dependent on the specific browser and the plug-ins. We went for a simple and effective method: make the login form a Silverlight application, so that users would be prompted to install Silverlight before logging in.

Our solutionn was to make the Silverlight application a drop-in replacement for the original HTML form. The Silverlight application controls a hidden HTML form: when a user hits the “Log In” buttonin the Silverlight application, the application inserts the appropriate information into the HTML form and submits it. This article describes the technique in detail.

The HTML Form

The HTML form is straightforward — the main thing that’s funky about it is that all of the controls are invisible, since we don’t want users to see them or interact with them directly:

[01] <form id="loginForm" method="post" action=""> [02] <div class="loginError" id="loginError"> [03] <%= Server.HtmlEncode(errorCondition) %> [04] </div> [05] <input type="hidden" name="username" id="usernameField" [06] value="<%= Server.HtmlEncode(username) %>" /> [07] <input type="hidden" name="password" id="passwordField" /> [08] </form>

The CSS file for the form contains the following directive:

[09] .loginError { display:none }

to prevent the error message from being visible on the HTML page.

Executing Javascript In Silverlight 2

A Silverlight 2 application can execute Javascript using the HtmlPage.Window.Eval() method; HtmlPage.Window is static, so you can use it anywhere, so long as you’re running in the UI thread. Our simple application doesn’t do communication and doesn’t launch new threads, so we don’t need to worry about threads. We add a simple wrapper method to help the rest of the code flow off the tips of our fingers

[10] using System.Windows.Browser

[11] ...

[12] namespace MyApplication {

[13] public partial class Page : UserControl {

[14] ...

[15] Object JsEval(string jsCode) {

[16] return HtmlPage.Window.Eval(jsCode);

[17] }

Note that Visual Studio doesn’t put the using in by default, so you’ll need to add it. The application is really simple, with just two event handlers and a little XAML to define the interface, so it’s all in a single class, the Page.xaml and Page.xaml.cs files created when I made the project in VIsual Studio.

Note that JsEval returns an Object, so you can look at the return value of a javascript evaluation. Numbers are returned as doubles and strings are returned as strings, which can be quite useful. References to HTML elements are returned as instances of the HtmlElement class. HtmlElement has some useful methods, such as GetAttribute() and SetAttribute(), but I’ve found that I get more reliable results by writing snippets of Javascript code.

Finding HTML Elements

One practical is problem is how to find the HTML Elements on the page that you’d like to work with. I’ve been spoiled by the $() function in Prototype and JQuery, so I like to access HTML elements by id. There isn’t a standard method to do this in all browser, so I slipped the following snippet of Javascript into the document:

[18] function returnObjById(id) {

[19] if (document.getElementById)

[20] var returnVar = document.getElementById(id);

[21] else if (document.all)

[22] var returnVar = document.all[id];

[23] else if (document.layers)

[24] var returnVar = document.layers[id];

[25] return returnVar;

[26] }

(code snippet courtesy of NetLobo)

I wrote a few convenience methods in C# inside my Page class:

[27] String IdFetchScript(string elementId) {

[28] return String.Format("returnObjById('{0}')", elementId);

[29] }

[30]

[31] HtmlElement FetchHtmlElement(string elementId) {

[32] return (HtmlElement)JsEval(IdFetchScript(elementId));

[33] }

Manipulating HTML Elements

At this point you can do anything that can be done in Javascript. That said, all I need is a few methods to get information in and out of the form:

[34] string GetTextInElement(string elementId) {

[35] HtmlElement e = FetchHtmlElement(elementId);

[36] if (e == null)

[37] return "";

[38]

[39] return (string)JsEval(IdFetchScript(elementId) + ".innerHTML");

[40] }

[41]

[42] void Set(string fieldId, string value) {

[43] HtmlElement e = FetchHtmlElement(fieldId);

[44] e.SetAttribute("value", value);

[45] }

[46]

[47] string GetFormFieldValue(string fieldId) {

[48] return (string) JsEval(IdFetchScript(fieldId)+".value");

[49] }

[50]

[51] void SubmitForm(string formId) {

[52] JsEval(IdFetchScript(formId) + ".submit()");

[53] }

Isolating the Javascript into a set of helper methods helps make the code maintainable: these methods are usable by a C#er who isn’t a Javascript expert — if we discover problems with cross-browser compatibility, we can fix them in a single place.

Putting it all together

A little bit of code in the constructor loads form information into the application:

[54] public Page() {

[55] ...

[56] LoginButton.Click += LoginButton_Click;

[57] UsernameInput.Text = GetFormFieldValue("usernameField");

[58] ErrorMessage.Text = GetTextInElement("loginError");

[59] }

The LoginButton_Click event handler populates the invisible HTML form and submits it:

[60] void LoginButton_Click(object sender, RoutedEventArgs e) {

[61] SetFormFieldValue("usernameField", UsernameInput.Text);

[62] SetFormFieldValue("passwordField", PasswordInput.Password);

[63] SubmitForm("loginForm");

[64] }

Conclusion

Although Silverlight 2 is capable of direct http communication with a web server, sometimes it’s convenient for a Silverlight application to directly manipulate HTML forms. This article presents sample source code that simplifies that task.

P.S. A commenter on the Silverlight.net forums pointed me to another article by Tim Heuer that describes alternate methods for manipulating the DOM and calling Javascript functions.

(Thanks skippy for the remote control image.)

]]>I program in PHP a lot, but I’ve avoided using autoloaders, except when I’ve been working in frameworks, such as symfony, that include an autoloader. Last month I started working on a system that’s designed to be part of a software product line: many scripts, for instance, are going to need to deserialize objects that didn’t exist when the script was written: autoloading went from a convenience to a necessity.

The majority of autoloaders use a fixed mapping between class names and PHP file names. Although that’s fine if you obey a strict “one class, one file” policy, that’s a policy that I don’t follow 100% of the time. An additional problem is that today’s PHP applications often reuse code from multiple frameworks and libraries that use different naming conventions: often applications end up registering multiple autoloaders. I was looking for an autoloader that “just works” with a minimum of convention and configuration — and I found that in a recent autoloader developed by A.J. Brown.

After presenting the way that I integrated Brown’s autoloader into my in-house frameowrk, this article considering the growing controversy over require(), require_once() and autoloading performance: to make a long story short, people are experiencing very different results in different environments, and the growing popularity of autoloading is going to lead to changes in PHP and the ecosystem around it.

History: PHP 4 And The Bad Old Days

In the past, PHP programmers have included class definitions in their programs with the following four built-in functions:

- include

- require

- include_once

- require_once

The difference between the include and require functions is that execution of a program will continue if a call to include fails and will result in an error if a call to require fails. require_ and require_once are reccomended for general use, since you’d probably rather have an application fail if libraries are missing rather than barrel on with unpredictable results. (Particularly if the missing library is responsible for authentication.)

If you write

01 require "filename.php";

PHP will scan the PHP include_path for a directory that contains a file called “filename.php”; it then executes the content of “filename.php” right where the function is called. You can do some pretty funky things this way, for instance, you can write

02 for (int i=0;i<10;$i++) {

03 require "template-$i.php";

04 }

to cause the sequential execution of a namset of PHP files named “template-0.php” through “template-9.php.” A required file has access to local variables in the scope that require is called, so require is particularly useful for web templating and situations that require dynamic dispatch (when you compute the filename.) A cool, but slightly obscure feature, is that an included file can return a value. If an source file, say “compute-value.php” uses the return statement,

05 return $some_value;

the value $some_value will be return by require:

06 $value=require "compute-value.php";

These features can be used to create an MVC-like framework where views and controllers are implemented as source files rather than objects.

require isn’t so appropriate, however, when you’re requiring a file that contains class definitions. Imagine we have a hiearchy of classes like Entity -> Picture -> PictureOfACar,PictureOfAnAnimal. It’s quite tempting for PictureofACar.php and PictureofAnAnimal.php to both

07 require "Picture.php";

this works fine if an application only uses PictureOfACar.php and requires it once by writing

08 require "PictureOfACar.php";

It fails, however, if an application requires both PictureOfACar and PictureOfAnAnimal since PHP only allows a class to be defined once.

require_once neatly solves this problem by keeping a list of files that have been required and doing nothing if a file has already been required. You can use require_once in any place where you’d like to guarantee that a class is available, and expect that things “just work”

__autoload

Well, things don’t always “just work”. Although large PHP applications can be maintained with require_once, it becomes an increasing burden to keep track of require files as applications get larger. require_once also breaks down once frameworks start to dynamically instantiate classes that are specified in configuration files. The developers of PHP found a creative solution in the __autoload function, a “magic” function that you can define. __autoload($class_name) gets called whenever a PHP references an undefined class. A very simple __autoload implementation can work well: for instance, the PHP manual page for __autoload has the following code snippet:

09 function _autoload($class_name) {

10 require_once $class_name . '.php';

11 }

If you write

12 $instance=new MyUndefinedClass();

this autoloader will search the PHP include path for “MyUndefinedClass.php.” (A real autoloader should be a little more complex than this: the above autoloader could be persuaded to load from an unsafe filename if user input is used to instantiate a class dynamically, i.e.

13 $instance=new $derived_class_name();

Static autoloading and autoloader proliferation

Unlike Java, PHP does not have a standard to relate source file names with class names. Some PHP developers imitate the Java convention to define one file per class and name their files something like

ClassName.php, or

ClassName.class.php

A typical project that uses code from several sources will probably have sections that are written with different conventions For instance, the Zend framework turns “_” into “/” when it creates paths, so the definition of “Zend_Loader” would be found underneath “Zend/Loader.php.”

A single autoloader could try a few different conventions, but the answer that’s become most widespread is for each PHP framework or library to contain it’s own autoloader. PHP 5.2 introduced the spl_register_autoload() function to replace __autoload(). spl_register_autoload() allows us to register multiple autoloaders, instead of just one. This is ugly, but it works.

One Class Per File?

A final critique of static autoloading is that it’s not universally held that “one class one file” is the best practice for PHP development. One of the advantages of OO scripting languages such as PHP and Python is that you can start with a simple procedural script and gradually evolve it into an OO program by gradual refactoring. A convention that requires to developers to create a new file for each class tends to:

- Discourage developers from creating classes

- Discourage developers from renaming classes

- Discourage developers from deleting classes

These can cumulatively lead programmers to make decisions based on what’s convenient to do with their development tools, not based on what’s good for the software in the long term. These considerations need to be balanced against:

- The ease of finding classes when they are organized “once class per file”,

- The difficulty of navigating huge source code files that contain a large number of classes, and

- Toolset simplification and support.

The last of these is particularly important when we compare PHP with Java. Since the Java compiler enforces a particular convention, that convention is supported by Java IDE’s. The problems that I mention above are greatly reduced if you use an IDE such as Eclipse, which is smart enough to rename files when you rename a class. PHP developers don’t benefit from IDEs that are so advanced — it’s much more difficult for IDE’s to understand a dynamic language. Java also supports inner classes, which allow captive classes (that are only accessed from within an enclosing class) to be defined inside the same file as the enclosing class. Forcing captive classes to be defined in separate files can cause a bewildering number of files to appear, which, in turn, can discourage developers from using captive classes — and that can lead to big mistakes.

Dynamic Autoloading

A. J. Brown has developed an autoloader that uses PHP’s tokenizer() to search a directory full of PHP files, search the files for classes, and create a mapping from class names to php source files. tokenizer() is a remarkable metaprogramming facility that makes it easy to write PHP programs that interpret PHP source. In 298 lines of code, Brown defines three classes. To make his autoloader fit into my in-house framework, I copied his classes into two files:

- lib/nails_core/autoloader.php: ClassFileMap, ClassFileMapAutoloader

- lib/nails_core/autoloader_initialize.php: ClassFileMapFactory

I’m concerned about the overhead of repeatedly traversing PHP library directories and parsing the files, so I run the following program to create the ClassFileMap, serialize it, and store it in a file:

bin/create_class_map.php:

14 <?php

15

16 $SUPRESS_AUTOLOAD=true;

17 require_once(dirname(__FILE__)."/../_config.php");

18 require_once "nails_core/autoloader_initialize.php";

19 $lib_class_map = ClassFileMapFactory::generate($APP_BASE."/lib");

20 $_autoloader = new ClassFileMapAutoloader();

21 $_autoloader->addClassFileMap($lib_class_map);

22 $data=serialize($_autoloader);

23 file_put_contents("$APP_BASE/var/classmap.ser",$data);

Note that I’m serializing the ClassFileMapAutoloader rather than the ClassFileMap, since I’d like to have the option of specifying more than one search directory. To follow the “convention over configuration” philosophy, a future version will probable traverse all of the directories in the php_include_path.

All of the PHP pages, controllers and command-line scripts in my framework have the line

24 require_once(dirname(__FILE__)."/../_config.php");

which includes a file that is responsible for configuring the application and the PHP environment. I added a bit of code to the _config.php to support the autoloader:

_config.php:

25 <?php

26 $APP_BASE = "/where/app/is/in/the/filesystem";

...

27 if (!isset($SUPPRESS_AUTOLOADER)) {

28 require_once "nails_core/autoloader.php";

29 $_autoloader=unserialize(file_get_contents($APP_BASE."/var/classmap.ser"));

30 $_autoloader->registerAutoload();

31 };

Pretty simple.

Autoloading And Performance

Although there’s plenty of controversy about issues of software maintainability, I’ve learned the hard way that it’s hard to make blanket statements about performance — results can differ based on your workload and the exact environment you’re working in. Although Brown makes the statement that “We do add a slight overhead to the application,” many programmers are discovering that autoloading improves performance over require_once:

Zend_Loader Performance Analysis

Autoloading Classes To Reduce CPU Usage

There seem to be two issues here: first of all, most systems that use require_once are going to err on the side of including more files than they need rather than fewer — it’s better to make a system slower and bloated than to make it incorrect. A system that uses autoloading will spend less time loading classes, and, just as important, less memory storing them. Second, PHP programmers appear to be experience variable results with require() and require_once():

Wikia Developer Finds require_once() Slower Than require()

Another Developer Finds Little Difference

Yet Another Developer Finds It Depends On His Cache Configuration

Rumor has it, PHP 5.3 improves require_once() performance

One major issues is that require_once() calls the realpath() C function, which in turn calls the lstat() system call. The cost of system calls can vary quite radically on different operating systems and even different filesystems. The use of an opcode cache such as XCache or APC can also change the situation.

It appears that current opcode caches (as of Jan 2008) don’t efficiently support autoloading:

Mike Willbanks Experience Slowdown With Zend_Loader

APC Developer States That Autoloading is Incompatible With Cacheing

Rambling Discussion of the state of autoloading with XCache

the issue is that they don’t, at compile time, know what files are going be required by the application. Opcode caches also reduce the overhead of loading superfluous classes, so they don’t get the benefits experienced with plain PHP.

It all reminds me of the situation with synchronized in Java. In early implementations of Java, synchronized method calls had an execution time nearly ten times longer than ordinary message calls. Many developers designed systems (such as the Swing windowing toolkit) around this performance problem. Modern VM’s have greatly accelerated the synchronization mechanism and can often optimize superfluous synchronizations away — so the performance advice of a decade ago is bunk.

Language such as Java are able to treat individual classes as compilation units: and I’d imagine that, with certain restrictions, a PHP bytecode cache should be able to do just that. This may involve some changes in the implementation of PHP.

Conclusion

Autoloading is an increasingly popular practice among PHP developers. Autoloading improves development productivity in two ways:

- It frees developers from thinking about loading the source files needed by an application, and

- It enables dynamic dispatch, situations where a script doesn’t know about all the classes it will interact with when it’s written

Since PHP allows developers to create their own autoloaders, a number of autoloaders exist. Many frameworks, such as the Zend Framework, symfony, and CodeIgniter, come with autoloaders — as a result, some PHP applications might contain more than one autoloader. Most autoloaders require that classes be stored in files with specific names, but Brown’s autoloader can scan directory trees to automatically locate PHP classes and map them to filenames. Eliminating the need for both convention and configuration, I think it’s a significant advance: in many cases I think it could replace the proliferation of autoloaders that we’re seeing today.

You’ll hear very different stories about the performance of autoload, require_once() and other class loading mechanisms from different people. The precise workload, operating system, PHP version, and the use of an opcode cache appear to be important factors. Widespread use of autoloading will probably result in optimization of autoloading throughout the PHP ecosystem.

]]>This article is a follow up to “Don’t Catch Exceptions“, which advocates that exceptions should (in general) be passed up to a “unit of work”, that is, a fairly coarse-grained activity which can reasonably be failed, retried or ignored. A unit of work could be:

- an entire program, for a command-line script,

- a single web request in a web application,

- the delivery of an e-mail message

- the handling of a single input record in a batch loading application,

- rendering a single frame in a media player or a video game, or

- an event handler in a GUI program

The code around the unit of work may look something like

[01] try {

[02] DoUnitOfWork()

[03] } catch(Exception e) {

[04] ... examine exception and decide what to do ...

[05] }

For the most part, the code inside DoUnitOfWork() and the functions it calls tries to throw exceptions upward rather than catch them.

To handle errors correctly, you need to answer a few questions, such as

- Was this error caused by a corrupted application state?

- Did this error cause the application state to be corrupted?

- Was this error caused by invalid input?

- What do we tell the user, the developers and the system administrator?

- Could this operation succeed if it was retried?

- Is there something else we could do?

Although it’s good to depend on existing exception hierarchies (at least you won’t introduce new problems), the way that exceptions are defined and thrown inside the work unit should help the code on line [04] make a decision about what to do — such practices are the subject of a future article, which subscribers to our RSS feed will be the first to read.

The cause and effect of errors

There are a certain range of error conditions that are predictable, where it’s possible to detect the error and implement the correct response. As an application becomes more complex, the number of possible errors explodes, and it becomes impossible or unacceptably expensive to implement explicit handling of every condition.

What do do about unanticipated errors is a controversial topic. Two extreme positions are: (i) an unexpected error could be a sign that the application is corrupted, so that the application should be shut down, and (ii) systems should bend but not break: we should be optimistic and hope for the best. Ultimately, there’s a contradiction between integrity and availability, and different systems make different choices. The ecosystem around Microsoft Windows, where people predominantly develop desktop applications, is inclined to give up the ghost when things go wrong — better to show a “blue screen of death” than to let the unpredictable happen. In the Unix ecosystem, more centered around server applications and custom scripts, the tendency is to soldier on in the face of adversity.

What’s at stake?

Desktop applications tend to fail when unexpected errors happen: users learn to save frequently. Some of the best applications, such as GNU emacs and Microsoft Word, keep a running log of changes to minimize work lost to application and system crashes. Users accept the situation.

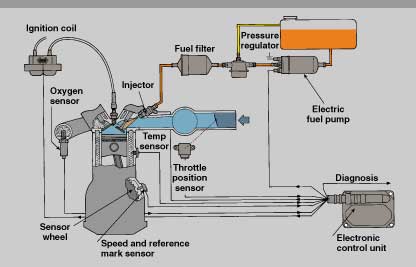

On the other hand, it’s unreasonable for a server application that serves hundreds or millions of users to shut down on account of a cosmic ray. Embedded systems, in particular, function in a world where failure is frequent and the effects must be minimized. As we’ll see later, it would be a real bummer if the Engine Control Unit in your car left you stranded home because your oxygen sensor quit working.

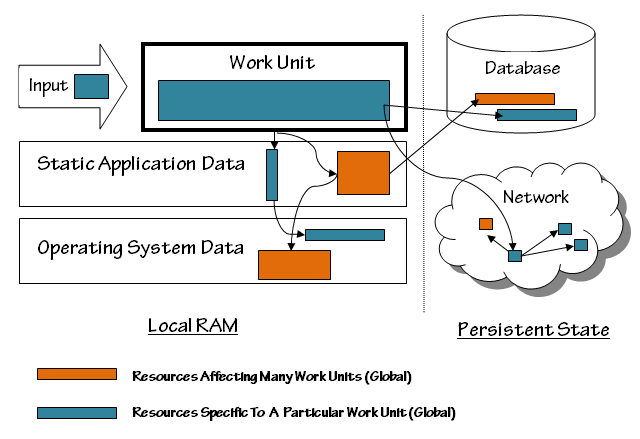

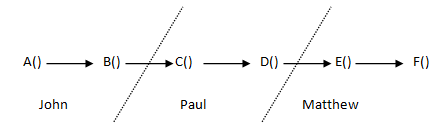

The following diagram illustrates the environment of a work unit in a typical application: (although this application accesses network resources, we’re not thinking of it as a distributed application. We’re responsible for the correct behavior of the application running in a single address space, not about the correct behavior of a process swarm.)

The Input to the work unit is a potential source of trouble. The input could be invalid, or it could trigger a bug in the work unit or elsewhere in the system (the “system” encompasses everything in the diagram) Even if the input is valid, it could contain a reference to a corrupted resource, elsewhere in the system. A corrupted resource could be a damaged data structure (such as a colored box in a database), or an otherwise malfunctioning part of the system (a crashed server or router on the network.)

Data structures in the work unit itself are the least problematic, for purposes of error handling, because they don’t outlive the work unit and don’t have any impact on future work units.

Static application data, on the other hand, persists after the work unit ends, and this has two possible consequences:

- The current work unit can fail because a previous work unit caused a resource to be corrupted, and

- The current work unit can corrupt a resource, causing a future work unit to fail

Osterman’s argument that applications should crash on errors is based on this reality: an unanticipated failure is a sign that the application is in an unknown (and possibly bad) state, and can’t be trusted to be reliable in the future. Stopping the application and restarting it clears out the static state, eliminating resource corruption.

Rebooting the application, however, might not free up corrupted resources inside the operating system. Both desktop and server applications suffer from operating system errors from time to time, and often can get immediate relief by rebooting the whole computer.

The “reboot” strategy runs out of steam when we cross the line from in-RAM state to persistent state, state that’s stored on disks, or stored elsewhere on the network. Once resources in the persistent world are corrupted, they need to be (i) lived with, or repaired by (ii) manual or (iii) automatic action.

In either world, a corrupted resource can have either a narrow (blue) or wide (orange) effect on the application. For instance, the user account record of an individual user could be damaged, which prevents that user from logging in. That’s bad, but it would hardly be catastrophic for a system that has 100,000 users. It’s best to ‘ignore’ this error, because a system-wide ‘abort’ would deny service to 99,999 other users; the problem can be corrected when the user complains, or when the problem is otherwise detected by the system administrator.

If, on the other hand, the cryptographic signing key that controls the authentication process were lost, nobody would be able to log in: that’s quite a problem. It’s kind of the problem that will be noticed, however, so aborting at the work unit level (authenticated request) is enough to protect the integrity of the system while the administrators repair the problem.

Problems can happen at an intermediate scope as well. For instance, if the system has damage to a message file for Italian users, people who use the system in the Italian language could be locked out. If Italian speakers are 10% of the users, it’s best to keep the system running for others while you correct the problem.

Repair

There are several tools for dealing with corruption in persistent data stores. In a one-of-a-kind business system, a DBA may need to intervene occasionally to repair corruption. More common events can be handled by running scripts which detect and repair corruption, much like the fsck command in Unix or the chkdsk command in Windows. Corruption in the metadata of a filesystem can, potentially, cause a sequence of events which leads to massive data loss, so UNIX systems have historically run the fsck command on filesystems whenever the filesystem is in a questionable state (such as after a system crash or power failure.) The time do do an fsck has become an increasing burden as disks have gotten larger, so modern UNIX systems use journaling filesystems that protect filesystem metadata with transactional semantics.

Release and Rollback

One role of an exception handler for a unit of work is to take steps to prevent corruption. This involves the release of resources, putting data in a safe state, and, when possible, the rollback of transactions.

Although many kinds of persistent store support transactions, and many in-memory data structures can support transactions, the most common transactional store that people use is the relational database. Although transactions don’t protect the database from all programming errors, they can ensure that neither expected or unexpected exceptions will cause partially-completed work to remain in the database.

A classic example in pseudo code is the following:

[06] function TransferMoney(fromAccount,toAccount,amount) {

[07] try {

[08] BeginTransaction();

[09] ChangeBalance(toAccount,amount);

[10] ... something throws exception here ...

[11] ChangeBalance(fromAccount,-amount);

[12] CommitTransaction();

[13] } catch(Exception e) {

[14] RollbackTransaction();

[15] }

[16] }

In this (simplified) example, we’re transferring money from one bank account to another. Potentially an exception thrown at line [05] could be serious, since it would cause money to appear in toAccount without it being removed from fromAccount. It’s bad enough if this happens by accident, but a clever cracker who finds a way to cause an exception at line [05] has discovered a way to steal money from the bank.

Fortunately we’re doing this financial transaction inside a database transaction. Everything done after BeginTransaction() is provisional: it doesn’t actually appear in the database until CommitTransaction() is called. When an exception happens, we call RollbackTransaction(), which makes it as if the first ChangeBalance() had never been called.

As mentioned in the “Don’t Catch Exceptions” article, it often makes sense to do release, rollback and repairing operations in a finally clause rather than the unit-of-work catch clause because it lets an individual subsystem take care of itself — this promotes encapsulation. However, in applications that use databases transactionally, it often makes sense to push transaction management out the the work unit.

Why? Complex database operations are often composed out of simpler database operations that, themselves, should be done transactionally. To take an example, imagine that somebody is opening a new account and funding it from an existing account:

[17] function OpenAndFundNewAccount(accountInformation,oldAccount,amount) {

[18] if (amount<MinimumAmount) {

[19] throw new InvalidInputException(

[20] "Attempted To Create Account With Balance Below Minimum"

[21] );

[22] }

[23] newAccount=CreateNewAccountRecords(accountInformation);

[24] TransferMoney(oldAccount,newAccount,amount);|

[25] }

It’s important that the TransferMoney operation be done transactionally, but it’s also important that the whole OpenAndFundNewAccount operation be done transactionally too, because we don’t want an account in the system to start with a zero balance.

A straightforward answer to this problem is to always do banking operations inside a unit of work, and to begin, commit and roll back transactions at the work unit level:

[26] AtmOutput ProcessAtmRequest(AtmInput in) {

[27] try {

[28] BeginTransaction();

[29] BankingOperation op=AtmInput.ParseOperation();

[30] var out=op.Execute();

[31] var atmOut=AtmOutput.Encode(out);

[32] CommitTransaction();

[33] return atmOut;

[34] }

[35] catch(Exception e) {

[36] RollbackTransaction();