Freebase is an open database of things that exist in the world: things like people, places, songs and television shows. As of the January 2009 dump, Freebase contained about 241 million facts, and it’s growing all the time. You can browse it via the web and even edit it, much like Wikipedia. Freebase also has an API that lets programs add data and make queries using a language called MQL. Freebase is complementary to DBpedia and other sources of information. Although it takes a different approach to the semantic web than systems based on RDF standards, it interoperates with them via linked data.

The January 2009 Freebase dump is about 500 MB in size. Inside the bzip2-compressed file, you’ll find something that’s similar in spirit to a Turtle RDF file, but is in a simpler format and represents each fact as a collection of four values rather than just three.

Your Own Personal Freebase

To start exploring and extracting from Freebase, I wanted to load the database into a star schema in a MySQL database, an architecture similar to that of some RDF stores, such as ARC. The project took about a week of time on a modern x86 server with 4 cores and 4 GB of RAM and resulted in an 18 GB collection of database files and indexes.

This is sufficient for my immediate purposes, but future versions of Freebase promise to be much larger: this article examines the means that could be used to improve performance and scalability using parallelism as well as improved data structures and algorithms.

I’m interested in using generic databases such as Freebase and DBpedia as a data source for building web sites. It’s possible to access generic databases through APIs, but there are advantages to having your own copy: you don’t need to worry about API limits and network latency, and you can ask questions that cover the entire universe of discourse.

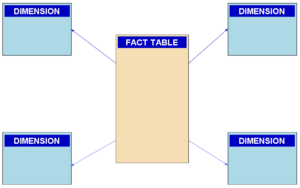

Many RDF stores use variations of a format known as a Star Schema for representing RDF triples; the Star Schema is commonly used in data warehousing applications because it can efficiently represent repetitive data. Freebase is similar to, but not quite, an RDF system. Although there are multiple ways of thinking about Freebase, the quarterly dump files provided by Metaweb are presented as quads: groups of four related terms on tab-delimited lines. To have a platform for exploring Freebase, I began a project of loading Freebase into a Star Schema in a relational database.

A Disclaimer

Timings reported in this article are approximate. This work was done on a server that was doing other things; little effort was made to control sources of variation such as foreign workload, software configuration and hardware characteristics. I think it’s orders of magnitude that matter here: with much larger data sets becoming available, we need tools that can handle databases 10-100 times as big, and quibbling about 20% here or there isn’t so important. I’ve gotten similar results with the ARC triple store. Some products do about an order of magnitude better: the Virtuoso server can load DBpedia, a larger database, in about 16 to 22 hours on a 16 GB computer. Several papers on RDF store performance are available [1] [2] [3]. Although the system described in this article isn’t quite an RDF store, its performance is comparable to that of a relatively untuned RDF store.

It took about a week of calendar time to load the 241 million quads in the January 2009 Freebase dump into a Star Schema using a modern 4-core web server with 4 GB of RAM; this time could certainly be improved with micro-optimizations, but it’s in the same range that people report for loading 10^8 triples into other RDF stores. (One product is claimed to load DBpedia, which contains about 100 million triples, in about 16 hours with “heavy-duty hardware”.) Data sets exceeding 10^9 triples are rapidly becoming available. These will soon exceed what can be handled with simple hardware and software and will require new techniques: both the use of parallel computers and optimized data structures.

The Star Schema

In a star schema, data is represented in separate fact and dimension tables. All of the rows in the fact table (quad) contain integer keys; the values associated with the keys are defined in dimension tables (cN_value). This efficiently compresses the data and indexes for the fact table, particularly when the values are highly repetitive.

I loaded data into the following schema:

create table c1_value (
   id integer primary key auto_increment,
   value text,
   key(value(255))
) type=myisam;

... identical c2_value, c3_value and c4_value tables ...

create table quad (
   id integer primary key auto_increment,
   c1 integer not null,
   c2 integer not null,
   c3 integer not null,
   c4 integer not null
) type=myisam;

Although I later created indexes on c1, c2, c3, and c4 in the quad table, I left unnecessary indexes off of the tables during the loading process because it’s more efficient to create indexes after loading data into a table. The keys on the value fields of the dimension tables are important, because the loading process does frequent queries to see if values already exist in the dimension table. The sequentially assigned id in the quad table isn’t necessary for many applications, but it gives each fact a unique identity and makes the system aware of the order of facts in the dump file.

The Loading Process

The loading script was written in PHP and used a naive method to build the index incrementally. In pseudo code it looked something like this:

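function insert_quad($q1,$q2,$q3,$q4) {
    // translate each of the four values into its integer dimension key
    $c1=get_dimension_key(1,$q1);
    $c2=get_dimension_key(2,$q2);
    $c3=get_dimension_key(3,$q3);
    $c4=get_dimension_key(4,$q4);
    // null lets auto_increment assign the fact id
    $conn->insert_row("quad",null,$c1,$c2,$c3,$c4);
}

function get_dimension_key($index,$value) {
    // the cache is checked per column, since the same string can
    // appear in more than one dimension table with a different id
    $cached_value=check_cache($index,$value);
    if ($cached_value)
        return $cached_value;
    // c1_value, c2_value, c3_value or c4_value
    $table="c{$index}_value";
    $row=$conn->fetch_row_by_value($table,$value);
    if ($row)
        return $row->id;
    // value not seen before: add it and return the new key
    $conn->insert_row($table,$value);
    return $conn->last_insert_id;
}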

Caching frequently used dimension values improves performance by a factor of five or so, at least in the early stages of loading. A simple cache management algorithm, clearing the cache every 500,000 facts, controls memory use. Timing data shows that a larger cache or better replacement algorithm would make at most an incremental improvement in performance (unless a complete dimension table index can be held in RAM, in which case all read queries can be eliminated).

I performed two steps after the initial load:

- Created indexes on quad(c1), quad(c2), quad(c3) and quad(c4)

- Used myisam table compression to reduce database size and improve performance

Loading Performance

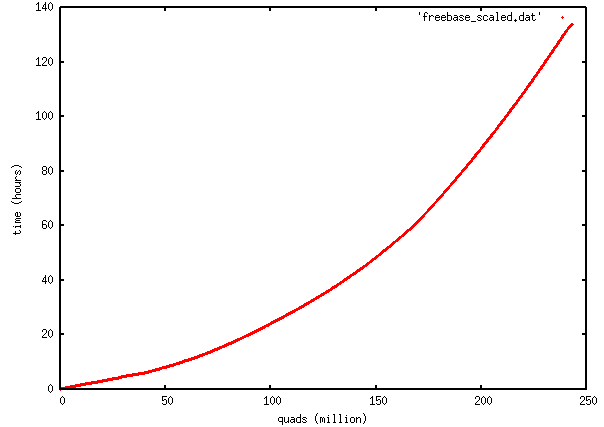

It took about 140 hours (nearly 6 days) to do the initial load. Here’s a graph of facts loaded vs elapsed time:

The important thing about this graph is that it’s convex upward: the loading process slows down as the number of facts increases. The first 50 million quads are loaded at a rate of about 6 million per hour; the last 50 million are loaded at a rate of about 1 million per hour. An explanation of the details of the curve would be complex, but the log N search performance of B-tree indexes and the ability of the database to answer queries out of the computer’s RAM cache would be significant factors. In general, all databases will perform the same way, becoming progressively slower as the size of the database increases: you’ll eventually reach a database size where the time to load the database becomes unacceptable.

The process of constructing B-tree indexes on the MySQL tables took most of a day. On average it took about four hours to construct a B-tree index on one column of quad:

mysql> create index quad_c4 on quad(c4);
Query OK, 243098077 rows affected (3 hours 40 min 50.03 sec)
Records: 243098077  Duplicates: 0  Warnings: 0

It took about an hour to compress the tables and rebuild indexes, at which point the data directory looks like:

-rw-r----- 1 mysql root 8588 Feb 22 18:42 c1_value.frm
-rw-r----- 1 mysql root 713598307 Feb 22 18:48 c1_value.MYD
-rw-r----- 1 mysql root 557990912 Feb 24 10:48 c1_value.MYI
-rw-r----- 1 mysql root 8588 Feb 22 18:56 c2_value.frm
-rw-r----- 1 mysql root 485254 Feb 22 18:46 c2_value.MYD
-rw-r----- 1 mysql root 961536 Feb 24 10:48 c2_value.MYI
-rw-r----- 1 mysql root 8588 Feb 22 18:56 c3_value.frm
-rw-r----- 1 mysql root 472636380 Feb 22 18:51 c3_value.MYD
-rw-r----- 1 mysql root 370497536 Feb 24 10:51 c3_value.MYI
-rw-r----- 1 mysql root 8588 Feb 22 18:56 c4_value.frm
-rw-r----- 1 mysql root 1365899624 Feb 22 18:44 c4_value.MYD
-rw-r----- 1 mysql root 1849223168 Feb 24 11:01 c4_value.MYI
-rw-r----- 1 mysql root 65 Feb 22 18:42 db.opt
-rw-rw---- 1 mysql mysql 8660 Feb 23 17:16 quad.frm
-rw-rw---- 1 mysql mysql 3378855902 Feb 23 20:08 quad.MYD
-rw-rw---- 1 mysql mysql 9927788544 Feb 24 11:42 quad.MYI

At this point it’s clear that, taken together, the indexes (.MYI files) are larger than the data (.MYD files) they index. Note that c2_value is much smaller than the other tables because it holds a relatively small number of predicate types:

mysql> select count(*) from c2_value;
+----------+
| count(*) |
+----------+
|    14771 |
+----------+
1 row in set (0.04 sec)

mysql> select * from c2_value limit 10;
+----+-------------------------------------------------------+
| id | value                                                 |
+----+-------------------------------------------------------+
|  1 | /type/type/expected_by                                |
|  2 | reverse_of:/community/discussion_thread/topic         |
|  3 | reverse_of:/freebase/user_profile/watched_discussions |
|  4 | reverse_of:/freebase/type_hints/included_types        |
|  5 | /type/object/name                                     |
|  6 | /freebase/documented_object/tip                       |
|  7 | /type/type/default_property                           |
|  8 | /type/type/extends                                    |
|  9 | /type/type/domain                                     |
| 10 | /type/object/type                                     |
+----+-------------------------------------------------------+
10 rows in set (0.00 sec)

The total size of the MySQL tablespace comes to about 18 GB, an expansion of about 40 times relative to the bzip2-compressed dump file.

Query Performance

After all of this trouble, how does it perform? Not too badly if we’re asking a simple question, such as pulling up the facts associated with a particular object:

mysql> select * from quad where c1=34493;
+---------+-------+------+---------+--------+
| id      | c1    | c2   | c3      | c4     |
+---------+-------+------+---------+--------+
| 2125876 | 34493 |   11 |      69 | 148106 |
| 2125877 | 34493 |   12 | 1821399 |      1 |
| 2125878 | 34493 |   13 | 1176303 | 148107 |
| 2125879 | 34493 | 1577 |      69 | 148108 |
| 2125880 | 34493 |   13 | 1176301 | 148109 |
| 2125881 | 34493 |   10 | 1713782 |      1 |
| 2125882 | 34493 |    5 | 1174826 | 148110 |
| 2125883 | 34493 | 1369 | 1826183 |      1 |
| 2125884 | 34493 | 1578 | 1826184 |      1 |
| 2125885 | 34493 |    5 |      66 | 148110 |
| 2125886 | 34493 | 1579 | 1826185 |      1 |
+---------+-------+------+---------+--------+
11 rows in set (0.05 sec)

Certain sorts of aggregate queries are reasonably efficient, if you don’t need to do them too often: we can look up the most common 20 predicates in about a minute:

select
   (select value from c2_value as v where v.id=q.c2) as predicate,count(*)
from quad as q
group by c2
order by count(*) desc
limit 20;

+-----------------------------------------+----------+
| predicate                               | count(*) |
+-----------------------------------------+----------+
| /type/object/type                       | 27911090 |
| /type/type/instance                     | 27911090 |
| /type/object/key                        | 23540311 |
| /type/object/timestamp                  | 19462011 |
| /type/object/creator                    | 19462011 |
| /type/permission/controls               | 19462010 |
| /type/object/name                       | 14200072 |
| master:9202a8c04000641f800000000000012e |  5541319 |
| master:9202a8c04000641f800000000000012b |  4732113 |
| /music/release/track                    |  4260825 |
| reverse_of:/music/release/track         |  4260825 |
| /music/track/length                     |  4104120 |
| /music/album/track                      |  4056938 |
| /music/track/album                      |  4056938 |
| /common/document/source_uri             |  3411482 |
| /common/topic/article                   |  3369110 |
| reverse_of:/common/topic/article        |  3369110 |
| /type/content/blob_id                   |  1174046 |
| /type/content/media_type                |  1174044 |
| reverse_of:/type/content/media_type     |  1174044 |
+-----------------------------------------+----------+
20 rows in set (43.47 sec)

You’ve got to be careful how you write your queries: the above query with the subselect is efficient, but I found it took 5 hours to run when I joined c2_value with quad and grouped on value. A person who wishes to do frequent aggregate queries would find it most efficient to create materialized views of the aggregates.
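One way to get the effect of a materialized view is a summary table that is rebuilt after each load. The sketch below is only an illustration: it uses Python's built-in sqlite3 module rather than MySQL so that it runs on its own, the table name `predicate_count` and the sample rows are made up, and it keeps the cheap pattern from above of grouping on the integer key first and looking up the predicate text afterwards.

```python
import sqlite3

def build_predicate_counts(conn):
    """Rebuild a summary table holding the number of facts per predicate key."""
    conn.execute("DROP TABLE IF EXISTS predicate_count")
    conn.execute(
        "CREATE TABLE predicate_count AS "
        "SELECT c2, COUNT(*) AS n FROM quad GROUP BY c2"
    )

def top_predicates(conn, limit=20):
    """Read the most common predicates from the small summary table."""
    return conn.execute(
        "SELECT v.value, p.n FROM predicate_count AS p "
        "JOIN c2_value AS v ON v.id = p.c2 "
        "ORDER BY p.n DESC, v.value LIMIT ?",
        (limit,),
    ).fetchall()

# Tiny stand-in for the real tables (hypothetical sample data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE c2_value (id INTEGER PRIMARY KEY, value TEXT)")
conn.execute(
    "CREATE TABLE quad (id INTEGER PRIMARY KEY, "
    "c1 INTEGER, c2 INTEGER, c3 INTEGER, c4 INTEGER)"
)
conn.executemany(
    "INSERT INTO c2_value (id, value) VALUES (?, ?)",
    [(1, "/type/object/type"), (2, "/type/object/name")],
)
conn.executemany(
    "INSERT INTO quad (c1, c2, c3, c4) VALUES (?, ?, ?, ?)",
    [(10, 1, 20, 30), (11, 1, 21, 31), (10, 2, 22, 32)],
)
build_predicate_counts(conn)
print(top_predicates(conn))
```

The summary table has one row per predicate (about 15,000 rows for this dump), so repeated reads never touch the fact table; the cost is that it has to be rebuilt whenever quad changes.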

Faster And Larger

It’s obvious that the Jan 2009 Freebase is pretty big to handle with the techniques I’m using. One thing I’m sure of is that Freebase will be much bigger next quarter, and I’m not going to do it the same way again. What can I do to speed the process up?

Don’t Screw Up

This kind of process involves a number of lengthy steps. Mistakes, particularly if repeated, can waste days or weeks. Although services such as EC2 are a good way to provision servers to do this kind of work, the use of automation and careful procedures is key to saving time and money.

Partition it

Remember how the loading rate of a data set decreases as the size of the set increases? If I could split the data set into 5 partitions of 50 M quads each, I could increase the loading rate by a factor of 3 or so. If I can build those 5 partitions in parallel (which is trivial), I can reduce wall-clock time by a factor of 15.
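The arithmetic behind those factors can be checked with a few lines of Python. This is a rough sketch that reuses the figures quoted earlier (241 million quads, about 140 hours, about 6 million quads per hour for the first 50 million) and makes the optimistic assumption that every partition loads at that early rate for its whole run.

```python
TOTAL_QUADS = 241e6   # quads in the January 2009 dump
TOTAL_HOURS = 140     # observed time for the single sequential load
EARLY_RATE = 6e6      # quads per hour observed over the first 50 million
PARTITIONS = 5        # partitions built in parallel

# Average rate of the original load, in quads per hour.
overall_rate = TOTAL_QUADS / TOTAL_HOURS

# Gain from keeping each table small enough to stay at the early rate
# (assumption: the early rate holds for an entire partition).
rate_gain = EARLY_RATE / overall_rate

# Gain in elapsed time when the partitions are also loaded side by side.
wallclock_gain = rate_gain * PARTITIONS

print(round(rate_gain, 1), round(wallclock_gain, 1))
```

With these inputs the gains come out at about 3.5 and about 17, in line with the rounder factors of 3 and 15 used above.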

Eliminate Random Access I/O

This loading process is slow because of the involvement of random access disk I/O. All of Freebase can be loaded into MySQL with the following statement,

LOAD DATA INFILE '/tmp/freebase.dat' INTO TABLE q FIELDS TERMINATED BY '\t';

which took me about 40 minutes to run. Processes that do a “full table scan” on the raw Freebase table with a grep or awk-type pipeline take about 20-30 minutes to complete. Dimension tables can be built quickly if they can be indexed by a RAM hash table. The process that builds the dimension table can emit a list of key values for the associated quads: this output can be sequentially merged to produce the fact table.
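A minimal sketch of that idea in Python, assuming the dimension values fit in memory: one dictionary per column plays the role of the RAM hash table, and each input line is turned into a row of integer keys in a single sequential pass. The function name and the sample lines are made up for illustration.

```python
import io

def encode_quads(lines):
    """Turn tab-delimited quads into integer keys in one sequential pass.

    Returns (dimensions, facts): dimensions[i] maps each distinct value of
    column i to its key, and facts holds one 4-tuple of keys per input line.
    """
    dimensions = [{} for _ in range(4)]
    facts = []
    for line in lines:
        values = line.rstrip("\n").split("\t")
        if len(values) != 4:
            raise ValueError("expected four tab-separated values: %r" % line)
        keys = []
        for table, value in zip(dimensions, values):
            # setdefault hands out the next key only when the value is new
            keys.append(table.setdefault(value, len(table) + 1))
        facts.append(tuple(keys))
    return dimensions, facts

# Hypothetical sample in the same four-column, tab-delimited shape.
sample = io.StringIO(
    "/guid/a\t/type/object/type\t/guid/b\t\n"
    "/guid/a\t/type/object/name\t/lang/en\tExample\n"
    "/guid/c\t/type/object/type\t/guid/b\t\n"
)
dimensions, facts = encode_quads(sample)
print(facts)
```

For a full dump, the key rows would be appended to a file instead of a list, and both that file and the dictionaries could then be bulk loaded with LOAD DATA INFILE, so the database never has to answer a lookup during the load.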

Bottle It

Once a data source has been loaded into a database, a physical copy of the database can be made and copied to another machine. Copies can be made in a fraction of the time that it takes to construct the database. A good example is the Amazon EC2 AMI that contains a preinstalled and preloaded Virtuoso database loaded with billions of triples from DBpedia, MusicBrainz, NeuroCommons and a number of other databases. Although the process of creating the image is complex, a new instance can be provisioned in 1.5 hours at the click of a button.

Compress Data Values

Unique object identifiers in Freebase are coded in an inefficient ASCII representation:

mysql> select * from c1_value limit 10;
+----+----------------------------------------+
| id | value                                  |
+----+----------------------------------------+
|  1 | /guid/9202a8c04000641f800000000000003b |
|  2 | /guid/9202a8c04000641f80000000000000ba |
|  3 | /guid/9202a8c04000641f8000000000000528 |
|  4 | /guid/9202a8c04000641f8000000000000836 |
|  5 | /guid/9202a8c04000641f8000000000000df3 |
|  6 | /guid/9202a8c04000641f800000000000116f |
|  7 | /guid/9202a8c04000641f8000000000001207 |
|  8 | /guid/9202a8c04000641f80000000000015f0 |
|  9 | /guid/9202a8c04000641f80000000000017dc |
| 10 | /guid/9202a8c04000641f80000000000018a2 |
+----+----------------------------------------+
10 rows in set (0.00 sec)

These are 38 bytes apiece. The hexadecimal part of the guid could be represented in 16 bytes in a binary format, and it appears that about half of the guid is a constant prefix that could be further excised.
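As a small illustration of the saving, the 32 hexadecimal digits after the `/guid/` prefix can be packed into 16 bytes and unpacked again without loss. The helper names below are made up, and the sketch does not attempt the further step of removing the shared prefix.

```python
PREFIX = "/guid/"

def pack_guid(value):
    """Pack '/guid/<32 hex digits>' into 16 raw bytes."""
    hex_part = value[len(PREFIX):]
    if not value.startswith(PREFIX) or len(hex_part) != 32:
        raise ValueError("not a guid value: %r" % value)
    return bytes.fromhex(hex_part)

def unpack_guid(packed):
    """Restore the original ASCII form from the 16-byte representation."""
    return PREFIX + packed.hex()

guid = "/guid/9202a8c04000641f800000000000003b"
packed = pack_guid(guid)
print(len(guid), len(packed), unpack_guid(packed) == guid)
```

In a MySQL dimension table the packed form would fit a fixed-width binary(16) column, which also makes the index on it smaller than a prefix index on a text column.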

A similar efficiency can be gained in the construction of in-memory dimension tables: md5 or sha1 hashes could be used as proxies for values.

The Freebase dump is littered with “reverse_of:” properties which are superfluous if the correct index structures exist to do forward and backward searches.

Parallelize it

Loading can be parallelized in many ways: for instance, the four dimension tables can be built in parallel. Dimension tables can also be built by a sorting process that can be performed on a computer cluster using map/reduce techniques. A cluster of computers can also store a knowledge base in RAM, trading sequential disk I/O for communication costs. Since the availability of data is going to grow faster than the speed of storage systems, parallelism is going to become essential for handling large knowledge bases, an issue identified by Japanese AI workers in the early 1980s.

Cube it?

Some queries benefit from indexes built on combinations of columns, such as

CREATE INDEX quad_c1_c2 ON quad(c1,c2);

There are 40 combinations of columns on which an index could be useful; however, the cost in time and storage involved in creating those indexes would be excessive. If such indexes were indeed necessary, a multidimensional database could create a cube index that is less expensive than a complete set of B-tree indexes.
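The text does not say how the figure of 40 is counted. One reading that reproduces it, offered here as an assumption, is to count every ordered choice of one, two or three of the four columns, since column order matters in a B-tree index:

```python
from itertools import permutations

COLUMNS = ("c1", "c2", "c3", "c4")

def candidate_indexes(max_width=3):
    """List every ordered choice of 1..max_width distinct columns."""
    return [
        cols
        for width in range(1, max_width + 1)
        for cols in permutations(COLUMNS, width)
    ]

indexes = candidate_indexes()
print(len(indexes))   # 4 single + 12 pairs + 24 triples
```

Under that reading there are 4 + 12 + 24 = 40 candidates. At roughly four hours per single-column index, as measured above, building even a fraction of them would take days.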

Break it up into separate tables?

It might be anathema to many semweb enthusiasts, but I think that Freebase (and parts of Freebase) could be efficiently mapped to conventional relational tables. That’s because facts in Freebase are associated with types; see, for instance, Composer from the Music Commons. It seems reasonable to map types to relational tables and to create satellite tables to represent many-to-many relationships between types. This scheme would automatically partition Freebase in a reasonable way and provide an efficient representation where many obvious questions (e.g. “Find Female Composers Born In 1963 Who Are More Than 65 inches tall”) can be answered with a minimum number of joins.

Conclusion

Large knowledge bases are becoming available that cover large areas of human concern, and we’re finding many applications for them. It’s possible to handle databases such as Freebase and DBpedia on a single computer of moderate size. However, the size of generic databases, and of the hardware needed to store them, is going to outgrow the ability of a single computer to process them. Fact stores that (i) use efficient data structures, (ii) take advantage of parallelism, and (iii) can be tuned to the requirements of particular applications are going to be essential for further progress in the Semantic Web.

Credits

- Metaweb Technologies, Freebase Data Dumps, January 13, 2009

- Kingsley Idehen, for several links about RDF store performance.

- Stewart Butterfield for encyclopedia photo.

This article is a follow-up to “Don’t Catch Exceptions”, which advocates that exceptions should (in general) be passed up to a “unit of work”, that is, a fairly coarse-grained activity which can reasonably be failed, retried or ignored. A unit of work could be:

- an entire program, for a command-line script,

- a single web request in a web application,

- the delivery of an e-mail message,

- the handling of a single input record in a batch loading application,

- rendering a single frame in a media player or a video game, or

- an event handler in a GUI program.

The code around the unit of work may look something like

[01] try {

[02] DoUnitOfWork();

[03] } catch(Exception e) {

[04] ... examine exception and decide what to do ...

[05] }

For the most part, the code inside DoUnitOfWork() and the functions it calls tries to throw exceptions upward rather than catch them.
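As a concrete illustration, here is a minimal Python sketch of the batch-loading case, where each input record is a unit of work. The record format and the function names are invented for the example; the point is only that the inner function raises freely and the loop around it decides what to do.

```python
import logging

logger = logging.getLogger("batch_loader")

def parse_record(line):
    """Hypothetical inner function: it raises on bad input instead of handling it."""
    name, amount = line.split(",")
    return name.strip(), int(amount)

def load_batch(lines):
    """Treat each record as a unit of work: a failure skips one record only."""
    loaded, failed = [], []
    for number, line in enumerate(lines, start=1):
        try:
            loaded.append(parse_record(line))      # the unit of work
        except Exception:
            # the one place where we examine the error and decide what to do
            logger.exception("record %d could not be loaded", number)
            failed.append(number)
    return loaded, failed

loaded, failed = load_batch(["alice, 10", "broken line", "bob, 20"])
print(loaded, failed)
```

Here the decision is to log and carry on with the next record; a stricter loader could stop at the first failure by re-raising from the same handler.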

To handle errors correctly, you need to answer a few questions, such as

- Was this error caused by a corrupted application state?

- Did this error cause the application state to be corrupted?

- Was this error caused by invalid input?

- What do we tell the user, the developers and the system administrator?

- Could this operation succeed if it was retried?

- Is there something else we could do?

Although it’s good to depend on existing exception hierarchies (at least you won’t introduce new problems), the way that exceptions are defined and thrown inside the work unit should help the code on line [04] make a decision about what to do — such practices are the subject of a future article, which subscribers to our RSS feed will be the first to read.

The cause and effect of errors

There is a certain range of error conditions that are predictable, where it’s possible to detect the error and implement the correct response. As an application becomes more complex, the number of possible errors explodes, and it becomes impossible or unacceptably expensive to implement explicit handling of every condition.

What to do about unanticipated errors is a controversial topic. Two extreme positions are: (i) an unexpected error could be a sign that the application is corrupted, so the application should be shut down, and (ii) systems should bend but not break: we should be optimistic and hope for the best. Ultimately, there’s a contradiction between integrity and availability, and different systems make different choices. The ecosystem around Microsoft Windows, where people predominantly develop desktop applications, is inclined to give up the ghost when things go wrong: better to show a “blue screen of death” than to let the unpredictable happen. In the Unix ecosystem, more centered around server applications and custom scripts, the tendency is to soldier on in the face of adversity.

What’s at stake?

Desktop applications tend to fail when unexpected errors happen: users learn to save frequently. Some of the best applications, such as GNU emacs and Microsoft Word, keep a running log of changes to minimize work lost to application and system crashes. Users accept the situation.

On the other hand, it’s unreasonable for a server application that serves hundreds or millions of users to shut down on account of a cosmic ray. Embedded systems, in particular, function in a world where failure is frequent and the effects must be minimized. As we’ll see later, it would be a real bummer if the Engine Control Unit in your car left you stranded at home because your oxygen sensor quit working.

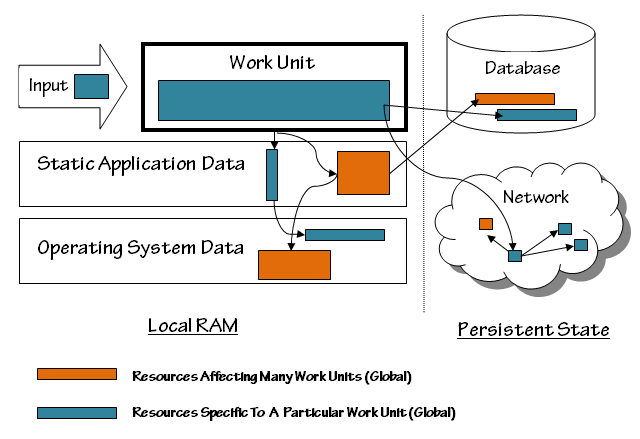

The following diagram illustrates the environment of a work unit in a typical application: (although this application accesses network resources, we’re not thinking of it as a distributed application. We’re responsible for the correct behavior of the application running in a single address space, not about the correct behavior of a process swarm.)

The input to the work unit is a potential source of trouble. The input could be invalid, or it could trigger a bug in the work unit or elsewhere in the system (the “system” encompasses everything in the diagram). Even if the input is valid, it could contain a reference to a corrupted resource elsewhere in the system. A corrupted resource could be a damaged data structure (such as a colored box in a database), or an otherwise malfunctioning part of the system (a crashed server or router on the network).

Data structures in the work unit itself are the least problematic, for purposes of error handling, because they don’t outlive the work unit and don’t have any impact on future work units.

Static application data, on the other hand, persists after the work unit ends, and this has two possible consequences:

- The current work unit can fail because a previous work unit caused a resource to be corrupted, and

- The current work unit can corrupt a resource, causing a future work unit to fail

Osterman’s argument that applications should crash on errors is based on this reality: an unanticipated failure is a sign that the application is in an unknown (and possibly bad) state, and can’t be trusted to be reliable in the future. Stopping the application and restarting it clears out the static state, eliminating resource corruption.

Rebooting the application, however, might not free up corrupted resources inside the operating system. Both desktop and server applications suffer from operating system errors from time to time, and often can get immediate relief by rebooting the whole computer.

The “reboot” strategy runs out of steam when we cross the line from in-RAM state to persistent state, state that’s stored on disks, or stored elsewhere on the network. Once resources in the persistent world are corrupted, they need to be (i) lived with, or repaired by (ii) manual or (iii) automatic action.

In either world, a corrupted resource can have either a narrow (blue) or wide (orange) effect on the application. For instance, the user account record of an individual user could be damaged, which prevents that user from logging in. That’s bad, but it would hardly be catastrophic for a system that has 100,000 users. It’s best to ‘ignore’ this error, because a system-wide ‘abort’ would deny service to 99,999 other users; the problem can be corrected when the user complains, or when the problem is otherwise detected by the system administrator.

If, on the other hand, the cryptographic signing key that controls the authentication process were lost, nobody would be able to log in: that’s quite a problem. It’s the kind of problem that will be noticed, however, so aborting at the work unit level (authenticated request) is enough to protect the integrity of the system while the administrators repair the problem.

Problems can happen at an intermediate scope as well. For instance, if the system has damage to a message file for Italian users, people who use the system in the Italian language could be locked out. If Italian speakers are 10% of the users, it’s best to keep the system running for others while you correct the problem.

Repair

There are several tools for dealing with corruption in persistent data stores. In a one-of-a-kind business system, a DBA may need to intervene occasionally to repair corruption. More common events can be handled by running scripts which detect and repair corruption, much like the fsck command in Unix or the chkdsk command in Windows. Corruption in the metadata of a filesystem can, potentially, cause a sequence of events which leads to massive data loss, so UNIX systems have historically run the fsck command on filesystems whenever the filesystem is in a questionable state (such as after a system crash or power failure). The time to do an fsck has become an increasing burden as disks have gotten larger, so modern UNIX systems use journaling filesystems that protect filesystem metadata with transactional semantics.

Release and Rollback

One role of an exception handler for a unit of work is to take steps to prevent corruption. This involves the release of resources, putting data in a safe state, and, when possible, the rollback of transactions.

Although many kinds of persistent store support transactions, and many in-memory data structures can support transactions, the most common transactional store that people use is the relational database. Although transactions don’t protect the database from all programming errors, they can ensure that neither expected nor unexpected exceptions will cause partially-completed work to remain in the database.

A classic example in pseudo code is the following:

[06] function TransferMoney(fromAccount,toAccount,amount) {

[07] try {

[08] BeginTransaction();

[09] ChangeBalance(toAccount,amount);

[10] ... something throws exception here ...

[11] ChangeBalance(fromAccount,-amount);

[12] CommitTransaction();

[13] } catch(Exception e) {

[14] RollbackTransaction();

[15] }

[16] }

In this (simplified) example, we’re transferring money from one bank account to another. Potentially an exception thrown at line [10] could be serious, since it would cause money to appear in toAccount without it being removed from fromAccount. It’s bad enough if this happens by accident, but a clever cracker who finds a way to cause an exception at line [10] has discovered a way to steal money from the bank.

Fortunately we’re doing this financial transaction inside a database transaction. Everything done after BeginTransaction() is provisional: it doesn’t actually appear in the database until CommitTransaction() is called. When an exception happens, we call RollbackTransaction(), which makes it as if the first ChangeBalance() had never been called.
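The same behavior can be observed in a small runnable sketch. It uses Python's built-in sqlite3 module with a made-up `account` table, and a `fail_midway` flag (an invention of this example) to simulate the exception between the two balance changes. Unlike the pseudo code above, it re-raises after rolling back so that the surrounding unit of work still learns about the failure.

```python
import sqlite3

def transfer_money(conn, from_account, to_account, amount, fail_midway=False):
    """Move money between two accounts inside one database transaction."""
    try:
        # sqlite3 opens a transaction implicitly before the first UPDATE
        conn.execute(
            "UPDATE account SET balance = balance + ? WHERE id = ?",
            (amount, to_account),
        )
        if fail_midway:
            raise RuntimeError("simulated failure between the two updates")
        conn.execute(
            "UPDATE account SET balance = balance - ? WHERE id = ?",
            (amount, from_account),
        )
        conn.commit()
    except Exception:
        conn.rollback()   # undo the provisional credit
        raise             # let the unit of work decide what happens next

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 0)])
conn.commit()

try:
    transfer_money(conn, 1, 2, 50, fail_midway=True)
except RuntimeError:
    pass
balances = dict(conn.execute("SELECT id, balance FROM account"))
print(balances)
```

After the failed attempt both balances are unchanged; calling the function again without `fail_midway` moves the money as expected.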

As mentioned in the “Don’t Catch Exceptions” article, it often makes sense to do release, rollback and repairing operations in a finally clause rather than the unit-of-work catch clause because it lets an individual subsystem take care of itself, which promotes encapsulation. However, in applications that use databases transactionally, it often makes sense to push transaction management out to the work unit.

Why? Complex database operations are often composed out of simpler database operations that, themselves, should be done transactionally. To take an example, imagine that somebody is opening a new account and funding it from an existing account:

[17] function OpenAndFundNewAccount(accountInformation,oldAccount,amount) {

[18] if (amount<MinimumAmount) {

[19] throw new InvalidInputException(

[20] "Attempted To Create Account With Balance Below Minimum"

[21] );

[22] }

[23] newAccount=CreateNewAccountRecords(accountInformation);

[24] TransferMoney(oldAccount,newAccount,amount);

[25] }

It’s important that the TransferMoney operation be done transactionally, but it’s also important that the whole OpenAndFundNewAccount operation be done transactionally too, because we don’t want an account in the system to start with a zero balance.

A straightforward answer to this problem is to always do banking operations inside a unit of work, and to begin, commit and roll back transactions at the work unit level:

[26] AtmOutput ProcessAtmRequest(AtmInput in) {

[27] try {

[28] BeginTransaction();

[29] BankingOperation op=in.ParseOperation();

[30] var out=op.Execute();

[31] var atmOut=AtmOutput.Encode(out);

[32] CommitTransaction();

[33] return atmOut;

[34] } catch(Exception e) {

[35] RollbackTransaction();

[36] ... Complete Error Handling ...

[37] }

[38] }

In this case, there might be a large number of functions that are used to manipulate the database internally, but these are only accessible to customers and bank tellers through a limited set of BankingOperations that are always executed in a transaction.

Notification

There are several parties that could be notified when something goes wrong with an application, most commonly:

- the end user,

- the system administrator, and

- the developers.

Sometimes, as in the case of a public-facing web application, the system administrator and the developers may overlap. In desktop applications, there might be no system administrator at all.

Let’s consider the end user first. The end user really needs to know (i) that something went wrong, and (ii) what they can do about it. Often errors are caused by user input: hopefully these errors are expected, so the system can tell the user specifically what went wrong: for instance,

[39] try {

[40] ... process form information ...

[41]

[42] if (!IsWellFormedSSN(ssn))

[43] throw new InvalidInputException("You must supply a valid social security number");

[44]

[45] ... process form some more ...

[46] } catch(InvalidInputException e) {

[47] DisplayError(e.Message);

[48] }

other times, errors happen that are unexpected. Consider a common (and bad) practice that we see in database applications: programs that write queries without correctly escaping strings:

[49] dbConn.Execute("

[50] INSERT INTO people (first_name,last_name)

[51] VALUES ('"+firstName+"','"+lastName+"');

[52] ");

this code is straightforward, but dangerous, because a single quote in the firstName or lastName variable ends the string literal in the VALUES clause, and enables an SQL injection attack. (I’d hope that you know better than to do this, but large projects worked on by large teams inevitably have problems of this order.) This code might even hold up well in testing, failing only in production when a person registers with

[53] lastName="O'Reilly";
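As an aside, the usual remedy is to pass values to the database separately from the SQL text. The sketch below shows this with Python's built-in sqlite3 module and its `?` placeholders; the `people` table mirrors the example above, and the helper name is made up.

```python
import sqlite3

def add_person(conn, first_name, last_name):
    """Insert a row using placeholders instead of string concatenation."""
    conn.execute(
        "INSERT INTO people (first_name, last_name) VALUES (?, ?)",
        (first_name, last_name),
    )

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (first_name TEXT, last_name TEXT)")

# The apostrophe is stored as data and never parsed as SQL.
add_person(conn, "Baba", "O'Reilly")
rows = conn.execute("SELECT first_name, last_name FROM people").fetchall()
print(rows)
```

The discussion that follows goes back to the unescaped version, to show what happens when this precaution is missing.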

Now, the dbConn is going to throw something like a SqlException with the following message:

SqlException.Message="Invalid SQL Statement:
    INSERT INTO people (first_name,last_name)
    VALUES ('Baba','O'Reilly');"

We could show that message to the end user, but it is worthless to most people. Worse than that, it’s harmful if the end user is a cracker who could take advantage of the error: it tells them the name of the affected table, the names of the columns, and the exact SQL code that they can inject something into. You might be better off showing users a generic error page and telling them that they’ve experienced an “Internal Server Error.” Even so, the discovery that a single quote can cause an “Internal Server Error” can be enough for a good cracker to sniff out the fault and develop an attack in the blind.

What can we do? Warn the system administrators. The error handling system for a server application should log exceptions, stack trace and all. It doesn’t matter if you use the UNIX syslog mechanism, the logging service in Windows NT, or something that’s built into your server, like Apache’s error_log. Although logging systems are built into both Java and .Net, many developers find that Log4j and log4net are especially effective.
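The split between what the user sees and what the administrator sees can be sketched in a few lines of Java using the built-in java.util.logging package. This is only an illustration: the class ErrorReporting, the method handleUnexpected and the constant GENERIC_MESSAGE are hypothetical names, and a real application would plug the same idea into whatever top-level exception handler its framework provides.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

final class ErrorReporting {
    private static final Logger LOG = Logger.getLogger(ErrorReporting.class.getName());

    /** Message that is safe to show to any end user (hypothetical wording). */
    static final String GENERIC_MESSAGE = "Internal Server Error";

    /** Logs the full exception for administrators and returns only a generic message. */
    static String handleUnexpected(Exception e) {
        // The message (which may contain SQL) and the stack trace go to the log only.
        LOG.log(Level.SEVERE, "Unhandled exception while processing request", e);
        return GENERIC_MESSAGE;
    }

    public static void main(String[] args) {
        Exception failure = new IllegalStateException(
            "Invalid SQL Statement: INSERT INTO people (first_name,last_name) VALUES ('Baba','O'Reilly');");
        // Only the generic text reaches standard output; the details go to the logger.
        System.out.println(handleUnexpected(failure));
    }
}
```

The point of the design is that nothing from the exception ever flows into the string that is returned for display.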

There really are two ways to use logs:

- Detailed logging information is useful for debugging problems after the fact. For instance, if a user reports a problem, you can look in the logs to understand the origin of the problem, making it easy to debug problems that occur rarely: this can save hours of time trying to understand the exact problem a user is experiencing.

- A second approach to logs is proactive: to regularly look at logs to detect problems before they get reported. In the example above, the SqlException would probably first be thrown by an innocent person who has an apostrophe in his or her name. If the error were detected that day and quickly fixed, a potential security hole could be closed long before it would be exploited. Organizations that investigate all exceptions thrown by production web applications run the most secure and reliable applications.

In the last decade it’s become quite common for desktop applications to send stack traces back to the developers after a crash: usually they pop up a dialog box that asks for permission first. Although developers of desktop applications can’t be as proactive as maintainers of server applications, this is a useful tool for discovering errors that escape testing and for learning how commonly they occur in the field.

Retry I: Do it again!

Some errors are transient: that is, if you try to do the same operation later, the operation may succeed. Here are a few common cases:

- An attempt to write to a DVD-R could fail because the disk is missing from the drive

- A database transaction could fail when you commit it because of a conflict with another transaction: an attempt to do the transaction again could succeed

- An attempt to deliver a mail message could fail because of problems with the network or destination mail server

- A web crawler that crawls thousands (or millions) of sites will find that many of them are down at any given time: it needs to deal with this reasonably, rather than drop your site from its index because it happened to be down for a few hours

Transient errors are commonly associated with the internet and with remote servers; errors are frequent because of the complexity of the internet, but they’re transitory because problems are repaired by both automatic and human intervention. For instance, if a hardware failure causes a remote web or email server to go down, it’s likely that somebody is going to notice the problem and fix it in a few hours or days.

One strategy for dealing with transient errors is to punt them back to the user: in a case like this, we display an error message that tells the user that the problem might clear up if they retry the operation. This is implicit in how web browsers work: sometimes you try to visit a web page, you get an error message, then you hit reload and it’s all OK. This strategy is particularly effective when the user could be aware that there’s a problem with their internet connection and could do something about it: for instance, they might discover that they’ve moved their laptop out of Wi-Fi range, or that the DSL connection at their house has gone down for the weekend.

SMTP, the internet protocol for email, is one of the best examples of automated retry. Compliant e-mail servers store outgoing mail in a queue: if an attempt to send mail to a destination server fails, mail will stay in the queue for several days before reporting failure to the user. Section 4.5.4 of RFC 2821 states:

The sender MUST delay retrying a particular destination after one attempt has failed. In general, the retry interval SHOULD be at least 30 minutes; however, more sophisticated and variable strategies will be beneficial when the SMTP client can determine the reason for non-delivery. Retries continue until the message is transmitted or the sender gives up; the give-up time generally needs to be at least 4-5 days. The parameters to the retry algorithm MUST be configurable. A client SHOULD keep a list of hosts it cannot reach and corresponding connection timeouts, rather than just retrying queued mail items. Experience suggests that failures are typically transient (the target system or its connection has crashed), favoring a policy of two connection attempts in the first hour the message is in the queue, and then backing off to one every two or three hours.

Practical mail servers use fsync() and other mechanisms to implement transactional semantics on the queue. The needs of reliability make it expensive to run an SMTP-compliant server, so e-mail spammers often use non-compliant servers that don’t correctly retry (if they’re going to send you 20 copies of the message anyway, who cares if only 15 get through?). Greylisting is a highly effective filtering strategy that tests the compliance of SMTP senders by forcing a retry.
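Automated retry of this kind can be sketched in Java as a small helper. This is a minimal sketch, assuming a fixed delay between attempts; a real mail server would use a much longer, configurable schedule along the lines the RFC describes. The names Retry and withRetry are made up for this example, and a production version would probably retry only exceptions that are known to be transient.

```java
import java.util.concurrent.Callable;

final class Retry {
    /**
     * Runs the operation up to maxAttempts times, sleeping delayMillis after
     * each failed attempt except the last. Rethrows the last failure if every
     * attempt fails.
     */
    static <T> T withRetry(Callable<T> operation, int maxAttempts, long delayMillis)
            throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        Exception last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.call();
            } catch (Exception e) {
                last = e;                       // remember the most recent failure
                if (attempt < maxAttempts) {
                    Thread.sleep(delayMillis);  // wait before trying again
                }
            }
        }
        throw last;                             // give up: every attempt failed
    }
}
```

A caller would wrap the flaky operation in a lambda, for example `Retry.withRetry(() -> fetchPage(url), 3, 1000L)`, where fetchPage stands in for whatever network call is being protected.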

Retry II: If first you don’t succeed…

An alternate form of retry is to try something different. For instance, many programs in the UNIX environment will look in many different places for a configuration file: if the file isn’t in the first place tried, they will try the second place and so forth.
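The configuration file search can be sketched in Java as a walk over a list of candidate paths. The class ConfigLocator, the method firstReadable and the file name myapp.conf are all hypothetical; the search order shown is just one plausible convention, not a rule that any particular program follows.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.Optional;

final class ConfigLocator {
    /** Returns the first candidate that exists as a readable regular file, if any. */
    static Optional<Path> firstReadable(List<Path> candidates) {
        for (Path candidate : candidates) {
            if (Files.isRegularFile(candidate) && Files.isReadable(candidate)) {
                return Optional.of(candidate);
            }
            // Not found here: fall through and try the next location.
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Assumed search order: current directory, then home directory, then /etc.
        List<Path> candidates = List.of(
            Path.of("myapp.conf"),
            Path.of(System.getProperty("user.home"), ".myapp.conf"),
            Path.of("/etc/myapp.conf"));
        System.out.println(firstReadable(candidates)
            .map(Path::toString)
            .orElse("no configuration file found; using built-in defaults"));
    }
}
```

Returning an Optional leaves the final fallback (built-in defaults, or an abort) up to the caller.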

The online e-print server at arXiv.org has a system called AutoTex which automatically converts documents written in several dialects of TeX and LaTeX into PostScript and PDF files. AutoTex unpacks the files in a submission into a directory and uses chroot to run the document processing tools in a protected sandbox. It tries about ten different configurations until it finds one that successfully compiles the document.

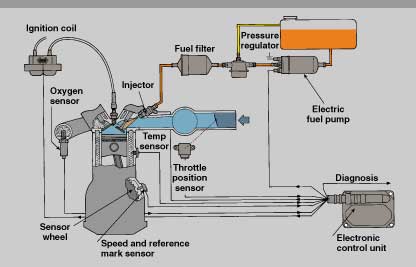

In embedded applications, where availability is important, it’s common to fall back to a “safe mode” when normal operation is impossible. The Engine Control Unit in a modern car is a good example:

Since the 1970s, regulations in the United States have reduced emissions of hydrocarbons and nitrogen oxides from passenger automobiles by more than a hundredfold. The technology has many aspects, but the core of the system is an Engine Control Unit that uses a collection of sensors to monitor the state of the engine and uses this information to adjust engine parameters (such as the quantity of fuel injected) to balance performance and fuel economy with environmental compliance.

As the condition of the engine, driving conditions and composition of fuel change over time, the ECU normally operates in a “closed-loop” mode that continually optimizes performance. When part of the system fails (for instance, the oxygen sensor) the ECU switches to an “open-loop” mode. Rather than leaving you stranded, it lights the “check engine” indicator and operates the engine with conservative assumptions that will get you home and to a repair shop.

Ignore?

One strength of exceptions, compared to the older return-value method of error handling, is that the default behavior of an exception is to abort, not to ignore. In general, that’s good, but there are a few cases where “ignore” is the best option. Ignoring an error makes sense when:

- security is not at stake, and

- there’s no alternative action available, and

- the consequences of an abort are worse than the consequences of ignoring the error.

The first rule is important, because crackers will take advantage of system faults to attack a system. Imagine, for instance, a “smart card” chip embedded in a payment card. People have successfully extracted information from smart cards by fault injection: this could be anything from a power dropout to a bright flash of light on an exposed silicon surface. If you’re concerned that a system will be abused, it’s probably best to shut down when abnormal conditions are detected.

On the other hand, some operations are vestigial to an application. Imagine, for instance, a dialog box that pops up when an application crashes and offers the user the choice of sending a stack trace to the vendor. If the attempt to send the stack trace fails, it’s best to ignore the failure: there’s no point in subjecting the user to an endless series of dialog boxes.
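In code, a deliberate “ignore” looks like an empty-handed catch block with a comment that explains why it is safe. The sketch below is in Java; CrashReporter, Uploader and trySend are hypothetical names, and the Uploader interface stands in for whatever mechanism actually delivers the report.

```java
final class CrashReporter {
    /** Hypothetical transport; a real one would talk to the vendor's server. */
    interface Uploader {
        void upload(String stackTrace) throws Exception;
    }

    /** Tries to send the report once; returns false, and does nothing else, on failure. */
    static boolean trySend(Uploader uploader, String stackTrace) {
        try {
            uploader.upload(stackTrace);
            return true;
        } catch (Exception e) {
            // Deliberately ignored: reporting is optional, and showing the user
            // a second error dialog would only make a bad situation worse.
            return false;
        }
    }
}
```

Returning a boolean rather than nothing lets the caller quietly note the outcome without forcing it to handle another exception.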

“Ignoring” often makes sense in the applications that matter the most and those that matter the least.

For instance, media players and video games operate in a hostile environment where disks, the network, sound and controller hardware are uncooperative. The “unit of work” could be the rendering of an individual frame: it’s appropriate for entertainment devices to soldier on despite hardware defects, unplugged game controllers, network dropouts and corrupted inputs, since the consequences of carrying on are no worse than those of shutting the system down.

In the opposite case, high-value and high-risk systems should continue functioning no matter what happens. The software for a space probe, for instance, should never give up. Much like an automotive ECU, space probes default to a “safe mode” when contact with the Earth is lost: frequently this strategy involves one or more reboots, but the goal is to always regain contact with controllers so that the mission has a chance at success.

Conclusion

It’s most practical to catch exceptions at the boundaries of relatively coarse “units of work.” Although the handling of errors usually involves some amount of rollback (restoring system state) and notification of affected people, the ultimate choices are still what they were in the days of DOS: abort, retry, or ignore.

Correct handling of an error requires some thought about the cause of an error: was it caused by bad input, corrupted application state, or a transient network failure? It’s also important to understand the impact the error has on the application state and to try to reduce it using mechanisms such as database transactions.

“Abort” is a logical choice when an error is likely to have caused corruption of the application state, or if an error was probably caused by a corrupted state. Applications that depend on network communications sometimes must “Retry” operations when they are interrupted by network failures. Another form of “Retry” is to try a different approach to an operation when the first approach fails. Finally, “Ignore” is appropriate when “Retry” isn’t available and the cost of “Abort” is worse than soldiering on.

This article is one of a series on error handling. The next article in this series will describe practices for defining and throwing exceptions that give exception handlers good information for making decisions. Subscribers to our RSS Feed will be the first to read it.

I got started with relational databases with MySQL, so I’m in the habit of making database changes with SQL scripts, rather than using a GUI. Microsoft SQL Server requires that we specify the name of a unique constraint when we want to drop it. If you’re thinking ahead, you can specify a name when you create the constraint; if you don’t, SQL Server will make up an unpredictable name, so you can’t write a simple script to drop the constraint.

A Solution

In the spirit of “How To Drop A Primary Key in SQL Server”, here’s a stored procedure that queries the data dictionary to find the names of any unique constraints on a specific table and column and drops them:

CREATE PROCEDURE [dbo].[DropUniqueConstraint]
    @tableName NVarchar(255),
    @columnName NVarchar(255)
AS
    DECLARE @IdxNames CURSOR

    SET @IdxNames = CURSOR FOR
        select sysindexes.name from sysindexkeys,syscolumns,sysindexes
            WHERE
                syscolumns.[id] = OBJECT_ID(N'[dbo].['+@tableName+N']')
                AND sysindexkeys.[id] = OBJECT_ID(N'[dbo].['+@tableName+N']')
                AND sysindexes.[id] = OBJECT_ID(N'[dbo].['+@tableName+N']')
                AND syscolumns.name=@columnName
                AND sysindexkeys.colid=syscolumns.colid
                AND sysindexes.[indid]=sysindexkeys.[indid]
                AND (
                    SELECT COUNT(*) FROM sysindexkeys AS si2
                    WHERE si2.id=sysindexes.id
                    AND si2.indid=sysindexes.indid
                )=1

    OPEN @IdxNames
    DECLARE @IdxName Nvarchar(255)
    FETCH NEXT FROM @IdxNames INTO @IdxName

    WHILE @@FETCH_STATUS = 0 BEGIN
        DECLARE @dropSql Nvarchar(4000)
        SET @dropSql= N'ALTER TABLE ['+@tableName+ N'] DROP CONSTRAINT ['+@IdxName+ N']'
        EXEC(@dropSql)
        FETCH NEXT FROM @IdxNames INTO @IdxName
    END
    CLOSE @IdxNames
    DEALLOCATE @IdxNames

Usage is straightforward:

EXEC [dbo].[DropUniqueConstraint] @tableName='TargetTable', @columnName='TargetColumn'

This script has a limitation: it only drops unique constraints that act on a single column, not constraints that act on multiple columns. It is smart enough, however, to not drop multiple-column constraints in case one of them involves @columnName.

Feedback from SQL stored procedure wizards would most certainly be welcome.

ALTER TABLE [dbo].[someTable] DROP CONSTRAINT [PK__someTabl__3214EC07271AA44F]

It’s less convenient, however, when you’re writing a set of migration scripts in SQL to implement changes that you make to the database over time. Specifically, if you create the table twice in two different databases, the hexadecimal string in the name of the key will be different: the ALTER TABLE statement will fail when you try to drop the index later, since the name of the key won’t match.

Here’s a stored procedure that looks up the name of the primary key in the system catalog and uses dynamic SQL to drop the index:

CREATE PROCEDURE [dbo].[DropPrimaryKey]
    @tableName Varchar(255)
AS
    /*
       Drop the primary key on @tableName

       http://gen5.info/q/

       Version 1.1
       June 9, 2008
    */

    DECLARE @pkName Varchar(255)

    SET @pkName= (
        SELECT [name] FROM sysobjects
            WHERE [xtype] = 'PK'
            AND [parent_obj] = OBJECT_ID(N'[dbo].['+@tableName+N']')
    )
    DECLARE @dropSql varchar(4000)

    SET @dropSql=
        'ALTER TABLE [dbo].['+@tableName+']
            DROP CONSTRAINT ['+@pkName+']'
    EXEC(@dropSql)
GO

Once you've loaded this stored procedure, you can write

EXEC [dbo].[DropPrimaryKey] @tableName='someTable'

It’s just that simple. Similar stored procedures can be written to convert fields from NOT NULL to NULL and to perform other operations that require a named constraint.

Many modern languages, such as Java, Perl, PHP and C#, are derived from C. In C, string literals are written in double quotes, and a set of double quotes can’t span a line break. One advantage of this is that the compiler can give clearer error messages when you forget to close a string. Some C-like languages, such as Java and C#, are like C in that double quotes can’t span multiple lines. Other languages, such as Perl and PHP, allow it. C# adds a new multiline quote operator, @"", which allows quotes to span multiple lines and removes the special treatment that C-like languages give to the backslash character (\).

The at-quote is particularly useful on the Windows platform because the backslash used as a path separator interacts badly with the behavior of quotes in C, C++ and many other languages. You need to write

String path="C:\\Program Files\\My Program\\Some File.txt";

With at-quote, you can write

String path=@"C:\Program Files\My Program\Some File.txt";

which makes the choice of path separator in MS-DOS seem like less of a bad decision.

Now, one really great thing about SQL is that the database can optimize complicated queries that have many joins and subselects — however, it’s not unusual to see people write something like

command.CommandText = "SELECT firstName,lastName,(SELECT COUNT(*) FROM comments WHERE postedBy=userId AND flag_spam='n') AS commentCount,userName,city,state,gender,birthdate FROM user,userLocation,userDemographics WHERE user.userId=userLocation.userId AND user.userId=userDemographics.user_id AND (SELECT COUNT(*) FROM friendsReferred WHERE referringUser=userId)>10 HAVING commentCount>3 ORDER BY commentCount";

(line-wrapped C# example) Complex queries like this can be excellent, because they can run thousands of times faster than loops written in procedural or OO languages which can fire off hundreds of queries, but a query written like the one above is a bit unfair to the person who has to maintain it.

With multiline quotes, you can continue the indentation of your code into your SQL:

command.CommandText = @"
   SELECT
      firstName
     ,lastName
     ,(SELECT COUNT(*) FROM comments
          WHERE postedBy=userId AND flag_spam='n') AS commentCount
     ,userName
     ,city
     ,state
     ,gender
     ,birthdate
   FROM user,userLocation,userDemographics
   WHERE
      user.userId=userLocation.userId
      AND user.userId=userDemographics.user_id
      AND (SELECT COUNT(*) FROM friendsReferred
          WHERE referringUser=userId)>10
   HAVING commentCount>3
   ORDER BY commentCount
";

Although this might not be quite as cool as LINQ, it works with MySQL, Microsoft Access or any database you need to connect to, and it works in many other languages, such as PHP (in which you could use ordinary double quotes). In languages like Java that don’t support multiline quotes, you can always write

String query =
     " SELECT"
    +" firstName"
    +" ,lastName"
    +" ,(SELECT COUNT(*) FROM comments"
    +" WHERE postedBy=userId AND flag_spam='n') AS commentCount"
    +" ,userName"
    +" ,city"
    +" ,state"
    +" ,gender"
    +" ,birthdate"
    +" FROM user,userLocation,userDemographics"
    +" WHERE"
    +" user.userId=userLocation.userId"
    +" AND user.userId=userDemographics.user_id"
    +" AND (SELECT COUNT(*) FROM friendsReferred"
    +" WHERE referringUser=userId)>10"
    +" HAVING commentCount>3"
    +" ORDER BY commentCount";

but that’s a bit more cumbersome and error prone.
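For what it’s worth, Java 15 and later versions add “text blocks” delimited by triple double quotes, which give much the same effect as the C# at-quote for embedded SQL (though backslash escapes are still processed inside them). The sketch below uses a shortened, made-up query rather than the full one above; the class name QueryText and the ? placeholder are assumptions for illustration.

```java
final class QueryText {
    // A text block (Java 15+). The compiler strips the common leading
    // indentation, so the SQL can be indented along with the Java code.
    static final String QUERY = """
        SELECT
           firstName
          ,lastName
        FROM user
        WHERE user.userId = ?
        """;

    public static void main(String[] args) {
        System.out.print(QUERY);
    }
}
```

On older Java versions, string concatenation as shown above remains the fallback.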
